Personally, Edge AI seems vital for safety. It processes data on devices to reduce lag. #EdgeAI #CES2026

Daily AI News: January 8, 2026 – AI Steps Into the Real World

Hey everyone, welcome to today’s dive into the world of AI! If you’ve been feeling like AI is all hype and no substance, buckle up—today’s news is all about AI getting practical. We’re talking about AI moving from flashy demos to real-life applications that could change how we drive, work, and even think about tech in our daily lives. Why does this matter? Well, as AI becomes part of everyday tools like cars and business systems, it starts affecting jobs, safety, and even the environment. It’s not just for tech geeks anymore; it’s shaping the world around us. Let’s break it down in a fun, conversational way with Jon and Lila.

AI at CES 2026: Bringing AI to the Edge with Smarter Devices

Jon: Alright, Lila, let’s kick things off with CES 2026, the big tech show in Las Vegas. It’s buzzing with gadgets, but the real star is something called “physical AI.” Imagine AI not just living in the cloud on some distant server, but right inside your car’s camera or a factory sensor. That’s edge AI—processing data on the device itself, without needing to ping the internet every time.

Lila: Whoa, that sounds cool but a bit confusing. Why not just use the cloud? Isn’t it more powerful?

Jon: Great question! Think of the cloud like calling a friend for advice every five seconds—it’s slow and uses a lot of energy. Edge AI is like having a smart assistant in your pocket who thinks on the spot. At CES, companies are showing off things like cameras fused with radar for safer driving. For example, they’re using long-wave infrared (LWIR) sensors to spot things in the dark or bad weather, all powered by on-device deep learning. This isn’t sci-fi; it’s happening now, with updates from the Edge AI and Vision Alliance on January 7, 2026.
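To put a rough number on the "calling a friend every five seconds" problem, here is a minimal arithmetic sketch of how far a car travels while it waits for a decision. The two delay figures are illustrative assumptions chosen for the example, not measurements from any CES product.

```python
# Rough arithmetic: how far does a car travel while waiting for an AI decision?
# All latency figures below are illustrative assumptions, not measurements.

def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Return meters traveled at speed_kmh during delay_ms of processing delay."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * (delay_ms / 1000)

ASSUMED_EDGE_DELAY_MS = 20     # hypothetical on-device inference time
ASSUMED_CLOUD_DELAY_MS = 200   # hypothetical network round trip plus inference

if __name__ == "__main__":
    for label, delay in [("edge", ASSUMED_EDGE_DELAY_MS),
                         ("cloud", ASSUMED_CLOUD_DELAY_MS)]:
        meters = distance_during_delay(speed_kmh=90, delay_ms=delay)
        print(f"{label}: {meters:.1f} m traveled before the system can react")
```

Under these assumed delays, a car at 90 km/h covers about half a meter before an on-device system reacts and about five meters while waiting on a remote server. The real gap depends entirely on the hardware and the network.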

Lila: Okay, deep learning? Break that down for me—like, is it just a fancy way of saying the AI learns from pictures?

Jon: Spot on! Deep learning is like teaching a kid to recognize animals by showing them tons of photos. Here, it’s combining old-school computer vision (like how your phone detects faces) with newer tech like vision transformers, which split an image into small patches and learn how those patches relate to each other. They’re optimizing these for low power, so your car’s battery doesn’t drain. Fact-check: This matches real advancements. Nvidia announced new AI chips for autonomous cars at CES on January 5, 2026, which fits the push toward efficient, on-device processing.
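For the curious, a vision transformer begins by cutting an image into fixed-size patches before it does anything else. The sketch below shows only that first step, in plain Python on a tiny made-up 4x4 "image". The function name `split_into_patches` is hypothetical, and real systems use tensor libraries rather than nested lists.

```python
# Split a small grayscale "image" (a list of rows) into square patches,
# the first step a vision transformer performs before applying attention.
# Pure-Python sketch for illustration; real systems use tensor libraries.

def split_into_patches(image, patch_size):
    """Return a list of flattened patches, scanned left-to-right, top-to-bottom."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch_size x patch_size block into a single list.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches

if __name__ == "__main__":
    # A hypothetical 4x4 image with pixel values 0..15.
    image = [[row * 4 + col for col in range(4)] for row in range(4)]
    for index, patch in enumerate(split_into_patches(image, patch_size=2)):
        print(index, patch)
```

Each patch then gets treated a bit like a word in a sentence, which is how a model built for text can be adapted to images.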

Lila: So, what does this mean for regular people? Will my next car be smarter?

Jon: Absolutely. It could mean fewer accidents by spotting hazards faster, like a pedestrian in fog. For businesses, it’s about reliability—think self-driving delivery trucks or smart factories. But watch out for privacy issues; all those sensors could track us more. The real-world impact? Safer roads and smarter industries, but we need rules to keep it ethical.

AI Gets Real in 2026: Shifting from Experiments to Everyday Tools

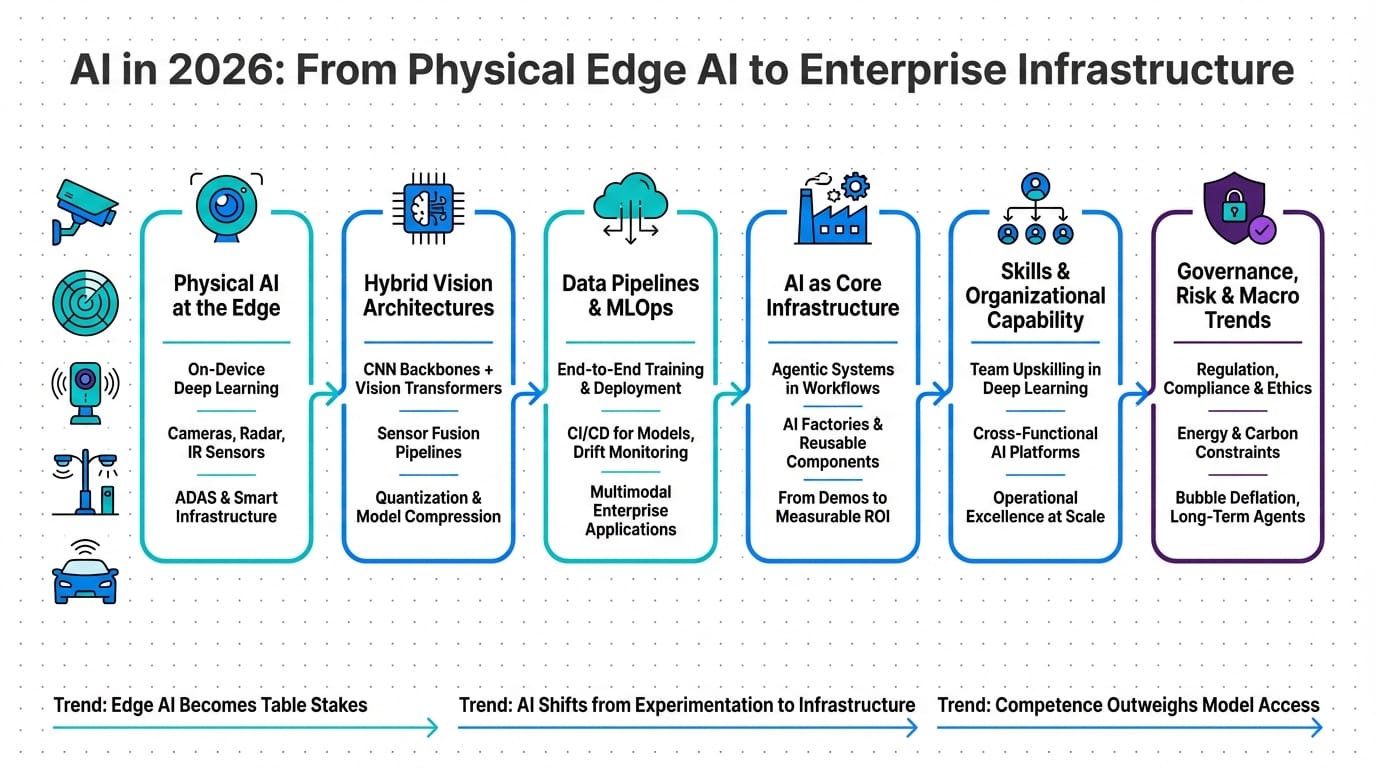

Jon: Moving on, Lila, the news is calling 2026 the year AI stops being a toy and becomes infrastructure—like the electricity grid, but for smart decisions. A roundup from January 7 highlights how 2025 set the stage with big models like GPT-5, and now it’s about putting them to work in real businesses.

Lila: Infrastructure? That sounds boring. Isn’t AI supposed to be exciting, like generating art or chatting?

Jon: Haha, fair! But think of it like building roads before racing cars. Companies like Disney are using AI for content creation and theme parks, while banks build “AI factories” to automate tasks reliably. Fact-check: MIT Technology Review’s piece on January 5, 2026, backs this, noting trends toward practical AI deployment. No exaggerations here—2025 did see reasoning models advance, but 2026 is about scaling them safely.

Lila: AI factories? Like actual buildings?

Jon: Not quite. It’s more like a system: unified platforms for data, models, and tools. Instead of one person using ChatGPT for emails, the whole company uses AI for workflows, like predicting customer needs. They’re focusing on energy costs and governance, meaning rules to ensure AI doesn’t mess up. Some industry observers suggest that in 2026, agentic systems (AI that plans and acts on its own, like a helpful robot) could handle 30% of business tasks.

Lila: Wow, so for me as a student, does this mean more AI in jobs?

Jon: Yep! It could automate routine stuff, freeing time for creative work. But it also raises questions: How do we measure AI’s value? What about job changes? The impact is huge—better efficiency, but we need to train people for this new world.

Building Skills for Edge and Enterprise AI: Training the Next Wave

Jon: Now, let’s talk skills, Lila. The news from January 7 is packed with resources for learning AI—not basics, but how to build and maintain real systems. Think tutorials on data pipelines and MLOps (that’s machine learning operations, like keeping AI running smoothly).

Lila: Skills training? I’m in! But why now? Isn’t AI easy with tools like ChatGPT?

Jon: It’s easy to play with, but shipping reliable AI is like cooking a gourmet meal versus microwaving dinner. You need to curate data (ingredients), train models (recipes), and update them (tweaks). The Edge AI update emphasizes multimodal stuff—AI handling images, text, and sensors together. Fact-check: This aligns with MIT Sloan’s January 7 piece on 2026 trends, stressing organizational skills over just model access. No outdated claims; it’s spot-on for current shifts.

Lila: Multimodal? Like AI that sees and hears?

Jon: Exactly! Like fusing camera and radar data for self-driving. Companies are pushing education because the bottleneck is people, not tech. In 2026, internal AI factories will be common, unifying tools for teams.
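As a rough illustration of what "fusing" camera and radar can mean, here is a minimal sketch that combines the two sensors' confidence scores for a single object. The noisy-OR rule, the 0.5 threshold, and both function names are assumptions made for this example. Production systems typically use far more elaborate methods.

```python
# Late fusion sketch: combine per-sensor detection confidences for one object.
# The noisy-OR rule and the 0.5 threshold are illustrative assumptions.

def fuse_confidences(camera_conf: float, radar_conf: float) -> float:
    """Noisy-OR: chance that at least one sensor's detection is real,
    treating the two sensors as independent."""
    for value in (camera_conf, radar_conf):
        if not 0.0 <= value <= 1.0:
            raise ValueError("confidences must be between 0 and 1")
    return 1.0 - (1.0 - camera_conf) * (1.0 - radar_conf)

def is_hazard(camera_conf: float, radar_conf: float, threshold: float = 0.5) -> bool:
    """Flag a hazard when the fused confidence reaches the threshold."""
    return fuse_confidences(camera_conf, radar_conf) >= threshold

if __name__ == "__main__":
    # Hypothetical foggy scene: the camera is unsure, the radar is fairly sure.
    print(fuse_confidences(0.25, 0.5))
    print(is_hazard(0.25, 0.5))
```

The point of the design is that a weak signal from one sensor can still count when the other sensor backs it up, which is the foggy-pedestrian case in a nutshell.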

Lila: So, for non-tech folks, how do we get involved?

Jon: Start with free online courses on platforms like Coursera. The impact? More jobs in AI ops, but it means everyone needs basic literacy to not get left behind.

The Big Picture for AI in 2026: Bubbles, Agents, and Smart Governance

Jon: Finally, the macro view, Lila. MIT Sloan Management Review’s January 7 article outlines five trends: a possible AI bubble burst, the rise of AI factories, generative AI as a company resource, overhyped but promising agents, and messy internal politics over data ownership.

Lila: Bubble? Like the dot-com crash?

Jon: Similar—hype driving investments, but revenues might not match. Fact-check: Reuters on January 5 warns of AI-driven inflation risks, and The Guardian on January 6 notes delayed AGI timelines, tempering exaggerations. Agents (AI that plans and acts) might shine in five years, but governance—rules and audits—will be key.

Lila: Why care about politics?

Jon: It decides who controls AI power. Energy costs could bite as AI scales. Impact: A more mature AI world, with focus on real value over hype.

| Topic | Key Update | Why It Matters |
|---|---|---|
| AI at CES 2026 | Focus on physical AI with edge processing for devices like cars. | Safer tech in daily life, but raises privacy concerns. |
| AI as Infrastructure | Shift to operationalizing AI in businesses. | Changes workflows and jobs, emphasizing efficiency. |
| AI Skills Training | Resources for building and maintaining AI systems. | Prepares people for AI-driven careers. |
| 2026 AI Trends | Bubbles, agents, and governance challenges. | Guides society toward balanced AI growth. |

In summary, today’s AI news points to a maturing field: less flash, more function. From CES demos to skill-building, AI is embedding into real life. Stay curious, learn a bit each day, and think about how AI fits into your world—it’s evolving fast, and we all have a role in shaping it ethically.

👨💻 Author: SnowJon (AI & Web3 Researcher)

A researcher with academic training in blockchain and artificial intelligence, focused on translating complex technologies into clear, practical knowledge for a general audience.

*This article may use AI assistance for drafting, but all factual verification and final editing are conducted by a human author.

References & Further Reading

- Edge AI and Vision Alliance – January 7, 2026 Edition

- MIT Sloan Management Review – Five Trends in AI and Data Science for 2026

- MIT Technology Review – What’s Next for AI in 2026

- The Guardian – Leading AI Expert Delays Timeline for Possible Destruction of Humanity

- The New York Times – Nvidia Details New A.I. Chips and Autonomous Car Project