How is AI changing the physical world? Discover daily updates on agentic AI controlling robots and more.

#AI #Robotics #DailyAINews

—

Quick Video Breakdown: This Blog Article

This video walks through the article clearly.

If you don’t have time to read the full text, it covers the key points quickly, so please check it out!

If you find this video helpful, please follow the YouTube channel “AIMindUpdate,” which delivers daily AI news.

https://www.youtube.com/@AIMindUpdate


Daily AI News: Bridging the Gap Between Digital Brains and Real-World Robots

Hey everyone, welcome back to our daily dive into the world of AI! Today we’re zooming in on a game-changing trend: the fusion of AI agents (software that can plan and act on its own) with physical robotics. Imagine if your virtual assistant could not only chat with you but also control a robot to fetch your coffee or assemble a gadget on a factory floor. That shift is happening right now, and it’s set to transform industries like manufacturing and logistics, and eventually everyday life. Why does this matter? As AI shows up everywhere, letting it interact seamlessly with the physical world means more efficient work, safer operations, and innovations that feel like science fiction coming true. Stick around as Jon and Lila break it down in simple terms!

Fujitsu Launches Kozuchi Physical AI 1.0: Merging Robots with Smart AI Agents

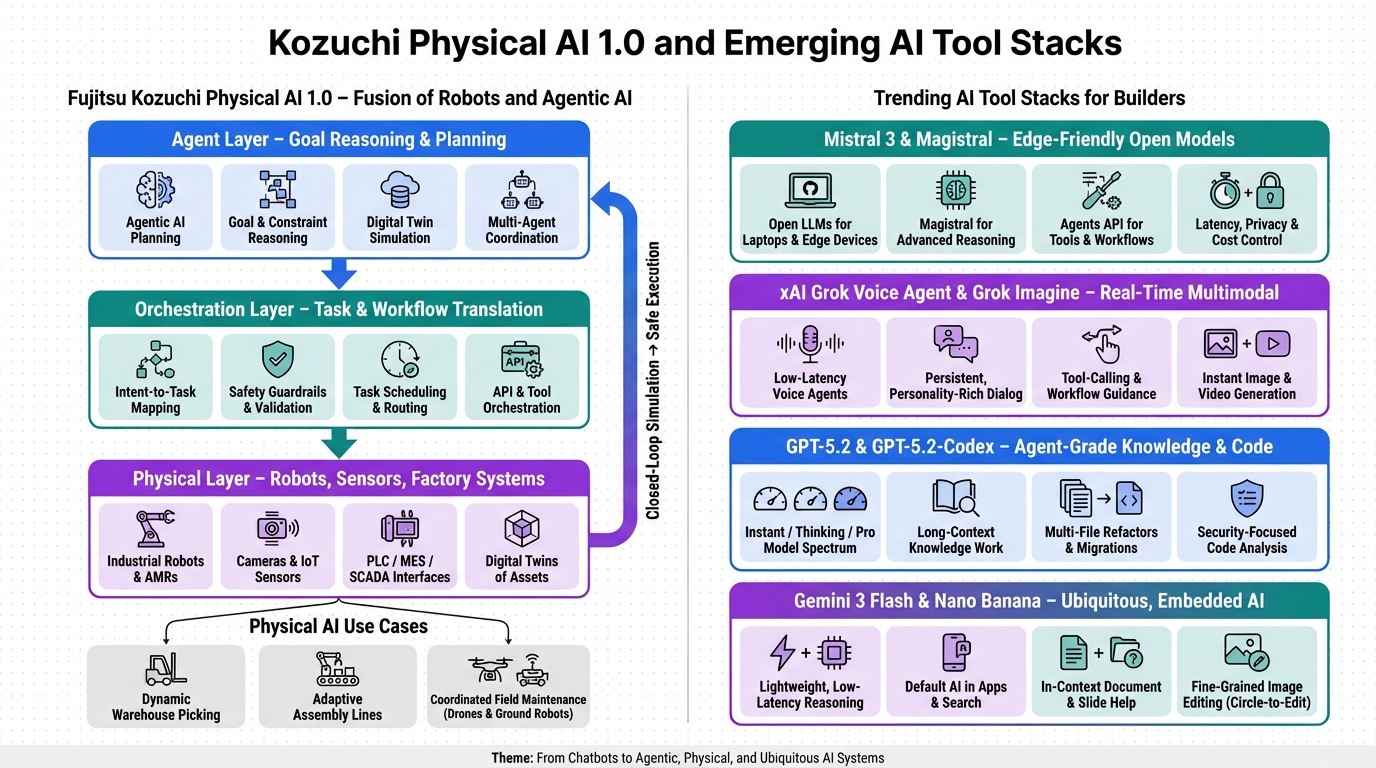

Jon: Alright, Lila, let’s kick things off with the big news from Japan. Fujitsu just launched something called Kozuchi Physical AI 1.0. It’s not just another AI model; it’s a platform that connects clever software agents with real robots. Think of it like giving your phone’s voice assistant the ability to control a robotic arm in a factory.

Lila: Whoa, that sounds futuristic! But break it down for me – what’s an “agentic AI” and why is Fujitsu mixing it with robots? I’m imagining robots taking over the world, but I know that’s probably hype.

Jon: Haha, yeah, let’s roast that hype a bit – no robot apocalypse here. Agentic AI means software that can think, plan, and act on its own, like a virtual helper that doesn’t just answer questions but makes decisions and executes tasks. Fujitsu’s platform stacks three layers: high-level agents that plan (like a brain deciding what to do), a middle layer that turns plans into specific actions, and a bottom layer that talks to actual robots or sensors. They use digital twins – virtual copies of real machines – to test everything safely in a simulation before it happens in the real world.

Lila: Okay, like practicing a dance in your head before doing it on stage? That makes sense for safety. But is this real? I’ve heard of companies like Nvidia talking about similar stuff.

Jon: Spot on! Fact check: according to recent announcements, Fujitsu partnered with Nvidia on this, and the platform has indeed launched as of December 24, 2025. It’s not exaggerated; it builds on existing tech like robotics APIs and AI models. No wild claims here: the goal is to standardize how AI controls physical systems without tons of custom coding.

Lila: So, what does this mean for everyday people? Will I see this in my job or home?

Jon: Great question. For manufacturers, it could mean smarter warehouses where robots reroute themselves based on live data, or assembly lines that fix issues on the fly. Imagine a drone and a ground robot teaming up for maintenance without human micromanagement. In your life, it might lead to better delivery services or even home robots that adapt to your needs. The impact? More efficiency, fewer errors, and jobs shifting toward oversight rather than manual labor. This is a step toward “physical AI” going mainstream, especially in industries like logistics.
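For readers curious about the shape of the three-layer design Jon described, here is a minimal Python sketch. Every class and method name in it (`PlannerAgent`, `ActionTranslator`, `DigitalTwin`, `RobotDriver`, `run`) is hypothetical and is not Fujitsu’s actual API. The “digital twin” is reduced to a simple bounds check; the only point is that the whole plan is validated in simulation before any command reaches hardware.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical names throughout: this illustrates the layering idea only,
# not Fujitsu's Kozuchi Physical AI API.


@dataclass
class Action:
    robot: str
    command: str
    target: tuple[float, float]  # (x, y) position in metres


class PlannerAgent:
    """Top layer: turns a high-level goal into plan steps."""

    def plan(self, goal: str) -> list[str]:
        # A real agent would call a language model; a fixed table stands in here.
        plans = {
            "restock shelf A": ["pick box from dock", "place box at shelf A"],
        }
        return plans.get(goal, [])


class ActionTranslator:
    """Middle layer: maps each plan step to a concrete robot command."""

    LOCATIONS = {"dock": (0.0, 0.0), "shelf A": (5.0, 2.0)}

    def translate(self, step: str) -> Action:
        verb = step.split()[0]
        for name, coords in self.LOCATIONS.items():
            if step.endswith(name):
                return Action(robot="arm-1", command=verb, target=coords)
        raise ValueError(f"unknown location in step: {step!r}")


class DigitalTwin:
    """Simulated workspace; here it only checks that targets stay in bounds."""

    def __init__(self, width: float, height: float) -> None:
        self.width = width
        self.height = height

    def is_safe(self, action: Action) -> bool:
        x, y = action.target
        return 0.0 <= x <= self.width and 0.0 <= y <= self.height


class RobotDriver:
    """Bottom layer: would talk to hardware; this stub just records commands."""

    def __init__(self) -> None:
        self.log: list[str] = []

    def execute(self, action: Action) -> None:
        self.log.append(f"{action.robot}: {action.command} -> {action.target}")


def run(goal: str, planner: PlannerAgent, translator: ActionTranslator,
        twin: DigitalTwin, driver: RobotDriver) -> bool:
    actions = [translator.translate(step) for step in planner.plan(goal)]
    # Check every action in the digital twin first; if any fails, nothing runs.
    if not all(twin.is_safe(a) for a in actions):
        return False
    for a in actions:
        driver.execute(a)
    return True


driver = RobotDriver()
ok = run("restock shelf A", PlannerAgent(), ActionTranslator(),
         DigitalTwin(width=10.0, height=10.0), driver)
print(ok, driver.log)
# prints: True ['arm-1: pick -> (0.0, 0.0)', 'arm-1: place -> (5.0, 2.0)']
```

The design choice worth noticing is that the twin checks the entire plan up front. With a smaller simulated workspace (say `DigitalTwin(4.0, 4.0)`), the shelf target falls outside it, `run` returns `False`, and the driver never receives a single command.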

Mistral 3 and Magistral: Open AI Models That Run Anywhere

Jon: Moving on, let’s chat about Mistral’s latest: the Mistral 3 family and Magistral. These are open-source, edge-friendly AI models designed to run on everything from laptops to drones, without needing massive cloud servers.

Lila: Open-source? Like free recipes anyone can use? And why “edge-friendly” – does that mean it works offline?

Jon: Exactly! Think of it like a cookbook that’s open for anyone to tweak. Edge-friendly means these models run on devices at the “edge” of the network, like your phone or a factory sensor, instead of always phoning home to a distant server. Fact check: Mistral has been releasing models like this for a while, and Mistral 3 is its 2025 evolution, tuned for reasoning tasks. Magistral is the reasoning-focused model for complex, multi-step thinking, and there’s an Agents API to connect the models to external tools. None of it is locked to big companies; you can deploy it locally for privacy and speed.

Lila: Cool analogy – like cooking at home instead of ordering takeout every time. But is it as powerful as the big names like GPT?

Jon: It’s getting there, aiming for roughly 80 to 90% of that capability with more control. The claims aren’t exaggerated; it’s practical for industrial systems or for organizations bound by EU data-protection rules. Real-world uses include building custom AI for offline environments, like field technicians’ tools or secure internal systems.

Lila: So what? How does this affect me if I’m not a developer?

Jon: It democratizes AI – cheaper, more private tools mean apps that work faster without sharing your data. For students or hobbyists, it’s easier to experiment. Overall, it pushes back against big tech monopolies, leading to more innovation in everyday apps.
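To make “an Agents API to connect them to tools” a bit more concrete, here is a minimal Python sketch of the general tool-calling loop that agent APIs are built around. It is not Mistral’s Agents API: `stub_model` is a hypothetical stand-in for a locally hosted model, and the `read_sensor` tool, the `TOOLS` registry, and the message format are all assumptions for illustration.

```python
from __future__ import annotations

import json
from typing import Callable


# Hypothetical tool: a real deployment would read actual hardware.
def read_sensor(sensor_id: str) -> float:
    readings = {"temp-1": 71.5, "temp-2": 68.0}
    return readings[sensor_id]


# Registry mapping tool names (as the model sees them) to Python functions.
TOOLS: dict[str, Callable[..., object]] = {"read_sensor": read_sensor}


def stub_model(messages: list[dict]) -> dict:
    """Stand-in for a locally hosted model: requests one tool call, then answers."""
    last = messages[-1]
    if last["role"] == "user":
        args = json.dumps({"sensor_id": "temp-1"})
        return {"tool_call": {"name": "read_sensor", "arguments": args}}
    return {"content": f"Sensor temp-1 reads {last['content']} degrees."}


def run_agent(question: str, model: Callable[[list[dict]], dict] = stub_model,
              max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = model(messages)
        if "tool_call" not in reply:
            return reply["content"]  # the model produced a final answer
        call = reply["tool_call"]
        result = TOOLS[call["name"]](**json.loads(call["arguments"]))
        # Feed the tool result back so the model can use it on the next turn.
        messages.append({"role": "tool", "name": call["name"], "content": str(result)})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("How warm is the server room?"))
# prints: Sensor temp-1 reads 71.5 degrees.
```

Because both the model and the tools run in the same process here, no data leaves the machine. That is the privacy argument for edge deployment in miniature. The `max_steps` cap keeps a confused model from looping forever.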

xAI’s Grok Voice Agent and Grok Imagine: Talking AI That Creates on the Fly

Jon: Next up, xAI’s Grok is making waves with its voice agent API and Grok Imagine for image/video generation.

Lila: Voice agent? Like Siri on steroids? And what’s the “personality” angle?

Jon: Yep, imagine a chatty assistant that talks back in real time, triggers actions, and even generates images from your words. Fact check: xAI, Elon Musk’s AI company, has been updating Grok, and this 2025 version focuses on low-latency voice and multimodal creation. It’s not magic, but bundling these pieces together makes it developer-friendly.

Lila: Like a LEGO set where pieces snap together easily? Why is latency a big deal?

Jon: Latency is the delay – low means conversations feel natural, like talking to a friend. Use cases: In-car helpers, game characters, or creative tools where you speak an idea and see visuals instantly.

Lila: Impact for non-techies?

Jon: Smoother AI in daily life, like better customer service bots or fun apps for creators. It could make AI feel more human, changing how we interact with tech.

GPT-5.2 and GPT-5.2-Codex: Smarter AI for Work and Coding

Jon: OpenAI’s GPT-5.2 series is out, with versions for quick tasks, deep thinking, and coding via Codex.

Lila: GPT again? What’s new here?

Jon: It’s an upgrade focused on reliability: it handles longer contexts and makes fewer errors. Fact check: building on GPT-5, this 2025 iteration focuses on agent-style work, like managing projects or scanning code for bugs. The Codex version is for developers, spotting vulnerabilities in large codebases.

Lila: So it’s like a super proofreader for code?

Jon: Yes, like an eagle-eyed editor who reads the whole book at once. Great for tedious tasks, shifting human focus to creativity.

Lila: Why does it matter?

Jon: It boosts productivity in offices and dev teams, making tasks like spreadsheet work or code reviews faster. For society, it means AI handling more routine work, freeing us for bigger ideas.

Gemini 3 Flash and Nano Banana: Google’s Everyday AI Magic

Jon: Finally, Google’s Gemini 3 Flash and Nano Banana, which cover fast AI answers and image editing.

Lila: Nano Banana? Sounds fun! What’s the scoop?

Jon: Flash is a speedy model for quick tasks, built into Google apps. Nano Banana lets you edit images by circling an area and describing the change you want. Fact check: Google has been advancing Gemini throughout 2025, emphasizing ubiquity over raw power.

Lila: Like editing a photo with a magic wand? And it’s integrated everywhere?

Jon: Precisely. It’s built into Search, Workspace, and Android, which makes help ambient rather than a separate app.

Lila: Real-world impact?

Jon: AI becomes invisible infrastructure, speeding up tasks like editing or searching. For users, it’s seamless help in daily digital life.

| Topic | Key Update | Why It Matters |
|---|---|---|
| Fujitsu Kozuchi Physical AI 1.0 | Platform fusing agentic AI with robotics for seamless control. | Brings AI into the physical world, boosting efficiency in industry. |
| Mistral 3 & Magistral | Open models for edge devices with a reasoning focus. | More accessible, private AI for offline and custom uses. |
| xAI’s Grok Tools | Voice API and image generation for real-time interaction. | Makes AI feel natural, enhancing apps and creativity. |
| GPT-5.2 Series | Upgraded for reliable knowledge work and coding. | Shifts routine tasks to AI, freeing humans for innovation. |
| Gemini 3 Flash & Nano Banana | Fast AI and intuitive image editing integrated everywhere. | Makes AI ubiquitous, simplifying daily digital tasks. |

As we wrap up today’s AI news, it’s clear the direction is toward more integrated, practical AI – from robots in factories to tools on your phone. These updates show AI evolving from chatbots to real-world helpers. Stay curious, think about how this tech shapes society, and keep learning! What do you think – exciting or a bit overwhelming? Drop your thoughts below.

👨‍💻 Author: SnowJon (AI & Web3 Researcher)

A researcher with academic training in blockchain and artificial intelligence, focused on translating complex technologies into clear, practical knowledge for a general audience.

*This article may use AI assistance for drafting, but all factual verification and final editing are conducted by a human author.

References & Further Reading

- Fujitsu Press Release on Kozuchi Physical AI 1.0

- Jason Wade on Key AI Developments

- Shelly Palmer on AI in December 2025

- Fujitsu and Nvidia on Agentic AI and Robotics

- NVIDIA on AI Advances in 2025