Personally, the sheer speed of chips at CES 2026 suggests local AI will soon be standard. #CES2026 #AIHardware

Daily AI News Roundup: Exciting Advances from CES 2026 and Beyond

Hey everyone, welcome to today’s dive into the world of AI! If there’s one big trend stealing the spotlight right now, it’s the explosion of AI hardware and tools unveiled at CES 2026. We’re talking about powerful new chips and platforms that could make AI faster, smarter, and more accessible in our daily lives. Why does this matter? Well, these updates aren’t just tech geek stuff—they could mean quicker AI assistants on your phone, robots helping in factories, and even better ways to create videos or chat with machines. In a world where AI is popping up everywhere from work to home, staying in the know helps us all understand how it’s shaping our future. Let’s break it down together with Jon and Lila.

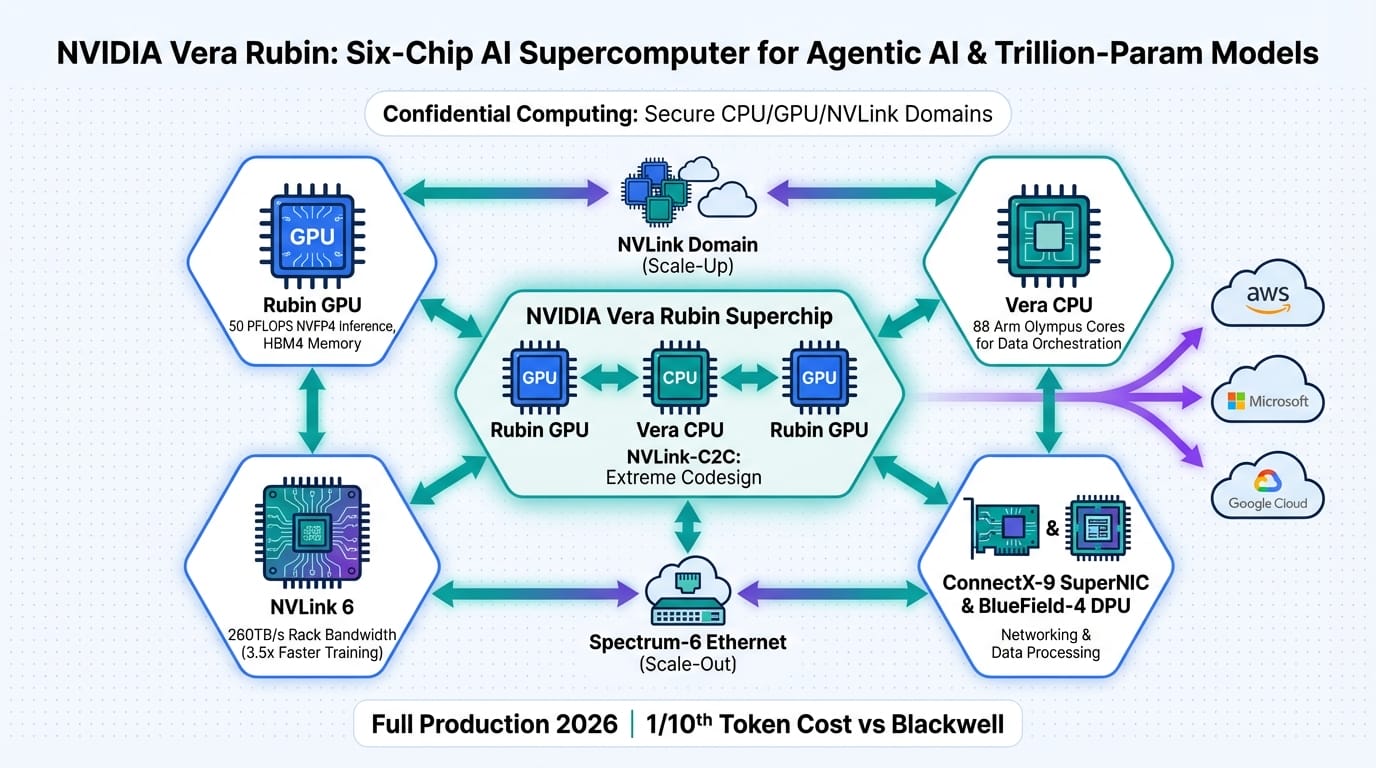

NVIDIA Unveils Vera Rubin AI Platform at CES 2026

Jon: Alright, Lila, let’s start with the star of CES 2026: NVIDIA’s new Vera Rubin platform. Named after the astronomer whose galaxy rotation measurements provided key evidence for dark matter, this is their next big leap in AI hardware, succeeding their previous Blackwell system. It’s designed to handle massive AI models with trillions of parameters, thanks to new Rubin GPUs paired with Vera CPUs and big boosts in memory and processing speed. They’re aiming for full production later this year, keeping NVIDIA ahead in the race for top AI tech.

Lila: Whoa, trillions of parameters? That sounds huge. Can you explain what that means in simple terms, like why it’s a big deal for someone like me who just uses AI for chatting or editing photos?

Jon: Absolutely! Think of an AI model like a super-smart brain. Parameters are like the connections in that brain: the more there are, the more capable the model tends to be. Vera Rubin is built to train and run these giant brains without slowing down, with very high memory bandwidth and efficiency gains that reportedly outpace old tech rules like Moore’s Law. It’s got custom chips for specific needs, like building AI for healthcare or self-driving cars. Fact-check wise, based on the latest reports, this isn’t hype: NVIDIA confirmed at CES that it’s set for real-world use in 2026, with partners like Mercedes reportedly already testing it for autonomous driving.
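To make “trillions of parameters” concrete, here is a rough back-of-the-envelope sketch in Python. It only estimates the memory needed to store a model’s weights. The bytes-per-parameter figures are the standard sizes of those number formats, while the function name and the example model sizes are assumptions chosen for illustration, not NVIDIA specifications.

```python
# Back-of-the-envelope: memory needed just to hold a model's weights.
# Bytes per parameter depend on the numeric precision used to store them.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Return gigabytes (10**9 bytes) needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes, picked only to show the scale difference.
    for name, params in [("7 billion", 7 * 10**9), ("1 trillion", 10**12)]:
        for precision in ("fp16", "fp8"):
            gb = weight_memory_gb(params, precision)
            print(f"{name} parameters at {precision}: {gb:,.0f} GB")
```

At 16-bit precision, a one-trillion-parameter model needs about 2,000 GB for its weights alone, before counting anything else the hardware has to hold. That is why memory capacity and bandwidth matter so much for platforms in this class.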

Lila: Okay, got it. So, what does this mean for everyday people? Will my next phone or laptop feel this impact?

Jon: Spot on. For you and me, it means faster AI tools that don’t drain power or cost a fortune. Businesses could deploy smarter chatbots or predictive systems cheaper, leading to things like personalized education apps or quicker medical diagnoses. On a bigger scale, countries building their own AI infrastructure—called sovereign AI—will use this to stay competitive. The real-world win? Expect AI models like the next version of GPT to evolve quicker, making tech more helpful in work and life without breaking the bank.

AMD Expands Ryzen AI 400 Series at CES for On-Device Power

Jon: Next up, AMD is firing back at CES with their Ryzen AI 400 series laptops. These come with upgraded Neural Processing Units (NPUs)—special chips for AI tasks—that make things like real-time translation or generating content super smooth right on your device. They’re also teasing Turin chips for data centers to compete in big-scale AI training.

Lila: NPUs? Break that down: is this like having a mini AI brain in my laptop?

Jon: Exactly! Imagine your laptop as a car: the CPU is the engine for general tasks, but the NPU is like a turbo booster just for AI stuff. The Ryzen AI 400 boosts this for on-device power, meaning no need to send data to the cloud for things like editing videos or live language translation. Reports from CES confirm superior performance per watt, which saves energy and money. It’s not just talk—AMD is positioning this to challenge Intel and NVIDIA in both consumer gadgets and enterprise servers.

Lila: Cool, so no more waiting for cloud responses? How does this change things for students or remote workers?

Jon: Huge difference! For students, imagine translating lectures in real-time or generating study notes without internet lags. Remote workers get privacy-focused AI that runs locally, reducing data leak risks. In the bigger picture, it pressures competitors to innovate, leading to more affordable AI hardware. The impact? More people can experiment with AI tools without high costs, democratizing tech for small businesses and hobbyists.

Hyundai’s AI+Robotics Roadmap Targets Human-Centered Bots

Jon: Shifting to robots, Hyundai shared their AI and robotics plans at CES, focusing on bots that use large language models (LLMs) for natural interactions in logistics and home help. They’re teaming up more with Boston Dynamics for better navigation and dexterity, creating modular robots that adapt to real-world messiness.

Lila: Modular robots? Like LEGO bots? And what’s an LLM in this context?

Jon: Haha, yes, like customizable LEGO sets! You can swap parts for different jobs. LLMs are the AI brains behind chat tools like ChatGPT, here used for robots to understand and respond to human commands naturally. Fact-checking from CES coverage, this builds on Hyundai’s ownership of Boston Dynamics, with reinforcement learning letting bots learn from trial and error. It’s not sci-fi—prototypes are already handling factory tasks or home assistance.
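“Learning from trial and error” can be pictured with a toy example. The Python sketch below is a minimal epsilon-greedy learner, a classic textbook technique, and not Hyundai’s or Boston Dynamics’ actual method. The three “grip styles” and their success rates are made up for illustration.

```python
import random


def run_trial_and_error(success_rates, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy learner: estimate each action's success rate by trying it.

    success_rates holds the true (hidden) chance that each action works.
    Returns the learned estimates and how often each action was tried.
    """
    rng = random.Random(seed)
    counts = [0] * len(success_rates)
    estimates = [0.0] * len(success_rates)
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(len(success_rates))
        else:
            # Exploit: otherwise use the action that looks best so far.
            action = max(range(len(estimates)), key=estimates.__getitem__)
        reward = 1.0 if rng.random() < success_rates[action] else 0.0
        counts[action] += 1
        # Incremental average of the rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


if __name__ == "__main__":
    grip_styles = [0.2, 0.5, 0.8]  # hypothetical success rates
    estimates, counts = run_trial_and_error(grip_styles)
    for i, (est, n) in enumerate(zip(estimates, counts)):
        print(f"grip {i}: tried {n} times, estimated success {est:.2f}")
```

The learner is never told which grip is best. It mostly repeats whatever has worked so far and occasionally experiments, which is the basic idea behind reinforcement learning, just at a far smaller scale than a real robot.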

Lila: That sounds helpful, but scary for jobs. What’s the real impact?

Jon: Fair point. It automates repetitive work, freeing humans for creative roles, but yes, it could shift job markets—think oversight instead of manual labor. For society, safer factories and elderly care bots could improve lives. Hyundai’s push means more practical robots soon, making AI feel more “human” in daily interactions.

MIT RLM and DeepSeek MHC Shake Up Open-Source Models

Jon: In model news, MIT’s Recursive Language Model (RLM) and DeepSeek’s MHC are making waves in open-source AI. RLM uses a recursive structure for better reasoning, while MHC reportedly boosts data flow by 400% with custom tweaks, helping smaller models punch above their weight on everyday hardware.

Lila: Recursive? Like a loop? Help me visualize this.

Jon: Think of it like a story that builds on itself: RLM processes info in loops for deeper thinking, unlike straight-line models. DeepSeek’s MHC, meanwhile, optimizes how memory and GPUs are used for efficiency. Based on recent discussions, these look like genuine open-source advances rather than overblown claims, reportedly matching closed models on some tasks. Smaller versions may even run on a laptop.
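One loose way to picture “processing info in loops” is a function that calls itself on smaller pieces of a long text and then combines the partial answers. The Python sketch below shows only that general pattern; it is not MIT’s implementation. `toy_model` is a hypothetical stand-in for a real language model call, and the `window` size is an arbitrary assumption.

```python
def toy_model(question: str, context: str) -> str:
    """Hypothetical stand-in for a language model call.

    Keeps only the sentences that mention the question's keyword.
    """
    hits = [s.strip() for s in context.split(".") if question.lower() in s.lower()]
    return ". ".join(hits) + ("." if hits else "")


def recursive_answer(question: str, context: str, window: int = 80) -> str:
    """Answer directly if the context fits the window; otherwise split,
    recurse on each half, and ask once more over the combined partial answers."""
    if len(context) <= window:
        return toy_model(question, context)
    sentences = [s.strip() + "." for s in context.split(".") if s.strip()]
    if len(sentences) < 2:
        return toy_model(question, context)  # cannot split any further
    mid = len(sentences) // 2
    left = recursive_answer(question, " ".join(sentences[:mid]), window)
    right = recursive_answer(question, " ".join(sentences[mid:]), window)
    return toy_model(question, (left + " " + right).strip())


if __name__ == "__main__":
    notes = (
        "The robot lifts boxes. The chip is fast. "
        "The robot charges at night. Lunch is at noon."
    )
    print(recursive_answer("robot", notes))
```

Each call only ever looks at a piece small enough to handle, so the same small “brain” can work through a text longer than it could take in at once.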

Lila: So, why care if I’m not a developer?

Jon: It means free, powerful AI for anyone—students can build apps, startups innovate faster without big budgets. It closes the gap between tech giants and independents, fostering creativity and accessibility.

Ray3 Modify by Luma — Controlled Video Generation

Jon: Tool time! Luma’s Ray3 Modify lets you generate or edit videos while keeping motion, emotions, and timing intact—perfect for creators.

Lila: Like magic video editing? How does it work?

Jon: Use reference images to swap elements seamlessly. It’s API-based and competes with tools like Runway by offering precise control. Great for marketing or films.

Lila: What’s the impact for beginners?

Jon: Lowers the barrier—anyone can create pro-level videos, boosting creativity in education and social media.

Qira by Lenovo — Cross-Device AI Continuity

Jon: Lenovo’s Qira connects devices with AI from OpenAI and others for tasks like syncing notes or queries across gadgets.

Lila: So a seamless workflow? And how does it handle privacy?

Jon: It offers privacy controls and local processing. It comes free with Lenovo hardware and is aimed at pros who need their work to follow them across several devices.

Lila: Why does that matter?

Jon: Boosts productivity—students juggle studies, workers streamline tasks, making AI feel integrated into life.

| Topic | Key Update | Why It Matters |
|---|---|---|
| NVIDIA Vera Rubin | New platform for massive AI models, production in 2026 | Faster, cheaper AI for everyone, from apps to national tech |
| AMD Ryzen AI 400 | Upgraded NPUs for local AI on laptops | Private, efficient AI without cloud reliance |
| Hyundai AI Robotics | Modular bots with LLMs for real-world tasks | Automates jobs, improves safety and daily help |
| MIT RLM & DeepSeek MHC | Open-source models with efficiency boosts | Accessible AI for innovators and learners |
| Luma Ray3 Modify | Precise video gen and editing tool | Easier content creation for creators |
| Lenovo Qira | Cross-device AI for tasks and privacy | Seamless productivity in a connected world |

Wrapping up, today’s AI news from CES 2026 points to a future where hardware and tools make AI more powerful yet user-friendly. From chips powering global innovations to robots and apps in our hands, it’s all accelerating. Stay curious, think about how these changes affect you, and keep questioning the role of AI in society—it’s our world to shape!

👨‍💻 Author: SnowJon (AI & Web3 Researcher)

A researcher with academic training in blockchain and artificial intelligence, focused on translating complex technologies into clear, practical knowledge for a general audience.

*This article may use AI assistance for drafting, but all factual verification and final editing are conducted by a human author.
