When It Comes to AI, Bigger Isn’t Always Better

John: Hey everyone, welcome back to the blog! Today, we’re diving into a hot topic in the AI world: why bigger models aren’t always the way to go. I’ve been following this closely, especially with all the buzz around model sizes exploding in recent years. Lila, you’ve been curious about this too—what’s on your mind?

Lila: Hi John! Yeah, I’ve heard about these massive AI models with billions of parameters, like they’re the key to everything. But the title suggests otherwise. Can you break it down for us beginners? Why isn’t bigger always better?

John: Absolutely, Lila. It’s a common misconception that scaling up AI models (making them bigger with more parameters) automatically leads to better performance. Reports like the Stanford AI Index show model performance improving dramatically and energy efficiency improving by about 40% per year, but bigger models still come with trade-offs in cost, speed, and sustainability. If you’re into automation that ties into AI efficiency, our deep-dive on Make.com (formerly Integromat) covers features, pricing, reviews, and use cases in plain English. It’s worth a look for streamlining your workflows.

The Basics of AI Model Size

Lila: Okay, start from the ground up. What exactly do we mean by “model size” in AI?

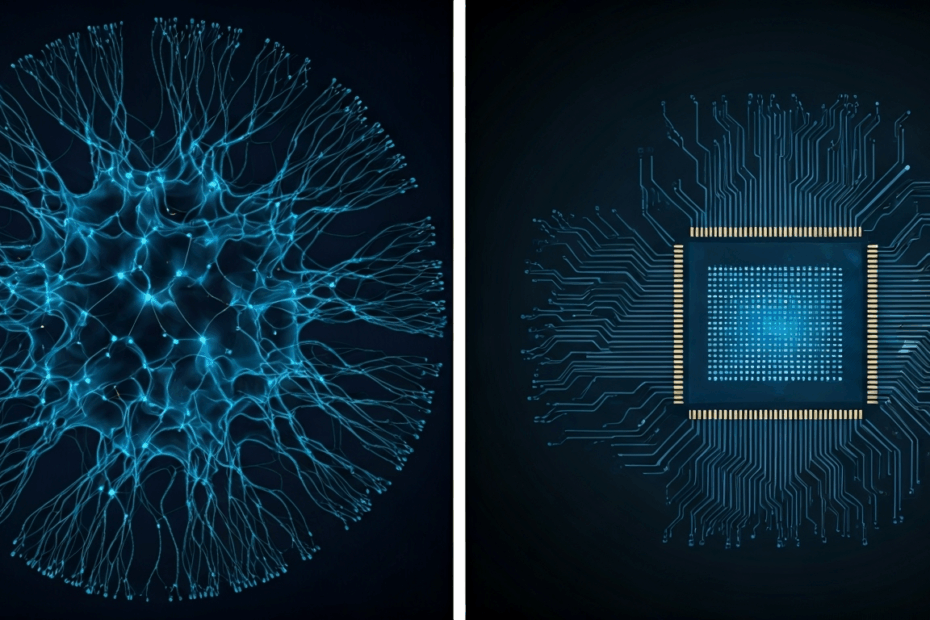

John: Great question! In simple terms, an AI model’s size refers to the number of parameters it has. These are like adjustable knobs whose settings the model learns from data. Think of them as loosely analogous to the connections between neurons in a brain: more parameters can mean more capacity to learn complex patterns. For example, GPT-4 is widely reported to have over a trillion parameters, though OpenAI has not confirmed a figure. According to a 2025 report from Nano-GPT, there’s a key trade-off between size and performance, including factors like cost and speed.
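For readers who like numbers, here is a rough sketch of why parameter count matters for cost. It assumes the only thing being stored is the weights themselves, and the two model sizes are hypothetical round numbers rather than real products. The function name `weight_memory_gb` is made up for this illustration.

```python
# Rough memory needed just to store a model's weights:
#   bytes = number of parameters x bytes per parameter
# The parameter counts below are hypothetical round numbers, not real models.

BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "float32") -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    examples = [
        ("small (1B params)", 1_000_000_000),
        ("large (100B params)", 100_000_000_000),
    ]
    for name, params in examples:
        for dtype in BYTES_PER_PARAM:
            gb = weight_memory_gb(params, dtype)
            print(f"{name:>20} @ {dtype:<7}: {gb:7.1f} GB")
```

Real deployments also need memory for activations and other runtime state, so treat these figures as a lower bound rather than a full hardware budget.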

Lila: So, bigger models are smarter? But why the caveat?

John: Not necessarily “smarter” in every scenario. While larger models often excel in broad tasks, they can be overkill for specific jobs. A Forbes article from early 2025 highlights that trends like multi-model approaches are rising, where smaller, specialized models team up for better efficiency without the bloat.

Key Trade-Offs: Performance vs. Efficiency

Lila: Trade-offs sound important. Can you explain how size impacts performance and efficiency?

John: Sure thing. Bigger models can handle more nuanced tasks, but they require massive computational power, leading to higher costs and slower inference times (that’s the time it takes to generate a response). A 2025 benchmark report from AllAboutAI shows top models on the LLM leaderboards roughly doubling their capabilities each year. At the same time, as Netguru’s optimization guide notes, smaller models optimized with techniques like pruning and quantization often match or exceed them in efficiency for targeted uses.

Lila: Pruning and quantization? Those sound technical—break it down?

John: No worries! Pruning is like trimming dead branches from a tree: you remove unnecessary parts of the model to make it leaner without losing much accuracy. Quantization reduces the precision of the numbers the model uses, say from 32-bit to 8-bit, which speeds things up and cuts memory use. Both are major trends in 2025, helping models run on everyday devices instead of power-hungry servers.

- Cost Savings: Smaller models reduce cloud computing bills, vital as AI’s energy demands skyrocket.

- Speed: They process queries faster, ideal for real-time apps like chatbots.

- Environmental Impact: With AI’s carbon footprint growing, efficient models align with sustainable tech goals from reports like the Penn Wharton Budget Model.

- Accessibility: Easier deployment on edge devices, as per WebProNews’s 2025 AI trends.
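To make the pruning and quantization ideas above a bit more concrete, here is a minimal sketch in plain Python. It assumes a "model" is just a flat list of weights, uses magnitude-based pruning (dropping the weights closest to zero) and symmetric 8-bit quantization. The function names are hypothetical, and real toolkits apply these ideas per layer with many refinements.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out roughly `fraction` of the weights with the smallest absolute value."""
    k = int(len(weights) * fraction)
    if k == 0:
        return list(weights)
    # Indices of the k weights closest to zero
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in q_weights]


if __name__ == "__main__":
    weights = [0.5, -0.01, 0.02, -1.27, 0.9, 0.003]  # toy values for illustration
    print("pruned:   ", prune_by_magnitude(weights, 0.5))
    q, scale = quantize_int8(weights)
    print("quantized:", q)
    print("restored: ", [round(w, 3) for w in dequantize(q, scale)])
```

The restored values are close to the originals but not identical. That small rounding error is the accuracy cost paid for storing each weight in one byte instead of four.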

Current Developments and 2025 Trends

Lila: What’s happening right now in 2025? Are there examples of smaller models winning out?

John: Definitely! Look at agentic AI systems, which are dominating headlines. A TechTarget piece from January 2025 points out that multimodal models, which handle text, images, and more, are trending, but the shift is toward optimized, smaller versions. For instance, open-source models like those from the Hugging Face community are proving that you can achieve high performance with fewer parameters through fine-tuning. And according to SentiSight.ai’s look at 2025 AI benchmarks, compute has scaled by about 4.4x annually since 2010, but efficiency gains mean smaller models are closing the gap.

Lila: That makes sense for practical use. How does this affect industries?

John: In sectors like healthcare and finance, smaller models enable faster, on-device processing without compromising privacy. Think federated learning, an emerging trend highlighted by ETC Journal in August 2025, where devices train a shared model together by exchanging model updates instead of raw data. It’s a game-changer for privacy-preserving AI.
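Here is a toy sketch of how that collaboration can work, using federated averaging, one common approach (the sources cited here don’t specify a particular algorithm, so that choice is an assumption). Each "device" fits a one-number model `w` for `y ~ w * x` on its own private data and sends back only the updated `w` and a sample count. All names and data are hypothetical.

```python
def local_update(w, data, lr=0.01, steps=20):
    """Run a few gradient-descent steps on one device's private (x, y) pairs."""
    for _ in range(steps):
        # Gradient of mean squared error for the model y ~ w * x
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w, len(data)  # only the weight and a count leave the device


def federated_average(updates):
    """Server step: average client weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def train(clients, rounds=10, w=0.0):
    """Alternate local training on each client with server-side averaging."""
    for _ in range(rounds):
        updates = [local_update(w, data) for data in clients]
        w = federated_average(updates)
    return w


if __name__ == "__main__":
    # Two devices with private data that both follow y = 3x
    clients = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)],
    ]
    print("learned w:", round(train(clients), 4))
```

The server in this sketch never sees an `(x, y)` pair, only weights. Production systems usually add protections such as secure aggregation on top, which this sketch leaves out.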

Challenges and Future Potential

Lila: Are there downsides to going smaller? And what’s next?

John: The main challenge is that accuracy can drop if a smaller model isn’t optimized well, but the tools are evolving. Looking ahead, we’re seeing integrations with quantum computing and sustainable energy, as per WebProNews. Penn Wharton’s projections suggest AI could boost GDP by 1.5% by 2035, and efficient models will be key to making gains like that practical. If creating documents or slides feels overwhelming, this step-by-step guide to Gamma shows how you can generate presentations, documents, and even websites in just minutes. It’s a prime example of how AI tools are getting smarter without needing gigantic models.

Lila: Quantum computing? That sounds futuristic—how does it tie in?

John: The idea is that quantum hardware could accelerate training for complex models, but even there, the trend is toward hybrid systems that balance size against efficiency. Azilen’s 2025 stats show generative AI adoption soaring, with productivity gains up to 40% from agentic systems that use smaller, task-specific models.

FAQs: Your Burning Questions

Lila: Let’s wrap with some FAQs. What’s one myth about AI size?

John: Myth: Bigger always means better accuracy. Reality: For many tasks, mid-sized models fine-tuned on quality data outperform giants, as per TechTarget’s trends.

Lila: How can readers experiment with this?

John: Start with open-source tools and play around with models on platforms like Hugging Face. And if automation’s your thing, our Make.com guide covers integrating efficient AI into your projects.

John’s Reflection: Wrapping up, it’s clear that in AI, efficiency often trumps sheer size—it’s about smarter design for real-world impact. As we head further into 2025, focusing on balanced models will drive innovation without the excess baggage. Stay curious, folks!

Lila’s Takeaway: Wow, this really flips the script for me—smaller can be mighty! Thanks, John; I’ll be thinking twice about those mega-models now.

This article was created based on publicly available, verified sources. References:

- When it comes to AI, bigger isn’t always better

- AI Model Size vs. Performance: Key Trade-Offs

- The 5 AI Trends In 2025: Agents, Open-Source, And Multi-Model

- AI model performance improvements show no signs of slowing down

- The Projected Impact of Generative AI on Future Productivity Growth

- AI Model Optimization Techniques for Enhanced Performance in 2025

- 8 AI and machine learning trends to watch in 2025

- 2025 AI Model Benchmark Report: Accuracy, Cost, Latency, SVI

- AI Benchmarks 2025: Performance Metrics Show Record Gains

- AI Trends Dominating Tech Innovation in 2025

- Five Emerging AI Trends in Late-August 2025

- Top Generative AI Statistics 2025: Adoption, Impact & Trends