Want to create an AI that *knows* your enterprise data? NVIDIA’s NeMo Retriever can help! Get ready to revolutionize your RAG.

Introducing Retrieval-Augmented Generation with NVIDIA NeMo Retriever

John: Hey everyone, welcome back to the blog! I’m John, your go-to guy for breaking down the latest in AI tech. Today, we’re diving into something super exciting: Retrieval-Augmented Generation, or RAG for short, specifically with NVIDIA’s NeMo Retriever. I’ve got my friend Lila here, who’s always full of great questions that help us unpack these topics for beginners and enthusiasts alike. Lila, what’s on your mind about this?

Lila: Hi John! I’ve been hearing a lot about RAG lately, especially with NVIDIA’s tools. But honestly, it sounds a bit intimidating. Can you start from the basics? What exactly is Retrieval-Augmented Generation?

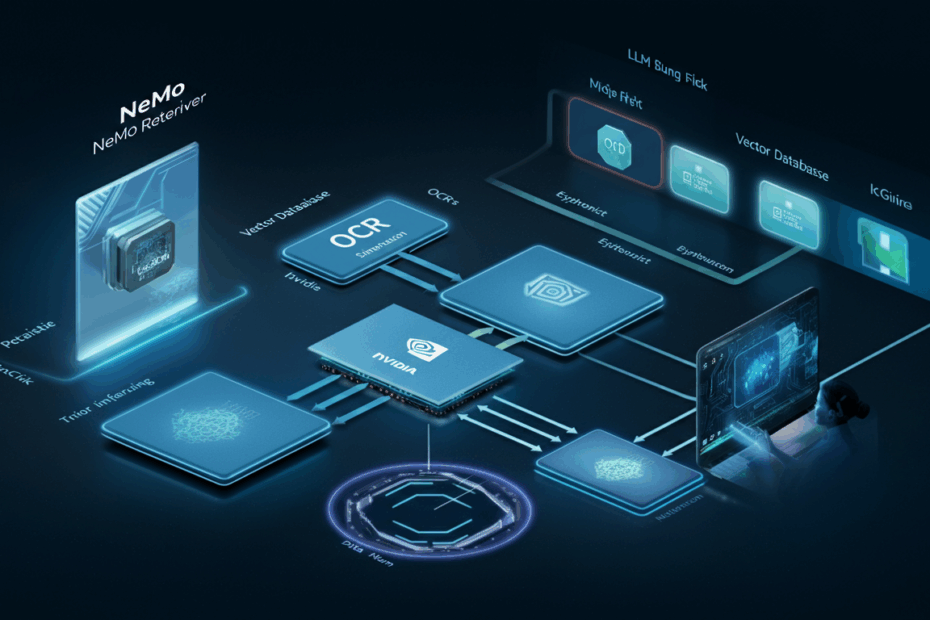

John: Absolutely, Lila. Let’s keep it simple. Imagine you’re chatting with an AI like ChatGPT, but instead of it just making stuff up from its training data, it quickly pulls in fresh, relevant info from a database or documents to make its answers more accurate and up-to-date. That’s RAG in a nutshell. It combines retrieval—grabbing the right info—with generation—creating a response. NVIDIA’s NeMo Retriever is a toolkit that makes this process smoother, especially for handling things like PDFs or enterprise data.
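
To make the retrieve-then-generate idea concrete, here is a minimal Python sketch. It is a toy, not NeMo Retriever's API: the `DOCUMENTS` list, the word-overlap `retrieve` function, and the `build_prompt` helper are all made up for illustration, and a real pipeline would use an embedding model for retrieval and send the prompt to an LLM.

```python
import re
from collections import Counter

# Hypothetical mini document store; a real system would index PDFs or enterprise data.
DOCUMENTS = [
    "The Q3 report shows revenue grew 12 percent year over year.",
    "Employees may carry over up to five vacation days into the next year.",
    "The data center migration is scheduled for completion in March.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, top_k=1):
    """Rank documents by shared word count (a stand-in for vector search)."""
    query_counts = Counter(tokenize(query))
    scored = []
    for doc in documents:
        overlap = sum((query_counts & Counter(tokenize(doc))).values())
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query, context_docs):
    """Assemble the grounded prompt that would be handed to a language model."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )


question = "How many vacation days can employees carry over?"
top_docs = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, top_docs)
print(prompt)
```

The generation step is deliberately left out: the point is only that the model receives the retrieved passage alongside the question, which is what grounds its answer.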

The Basics of RAG and Why It Matters

Lila: Okay, that analogy helps—like having a super-smart librarian who not only finds the book but summarizes it for you. But why is this a big deal now? I’ve seen it trending on X with NVIDIA announcements.

John: Spot on! RAG matters because large language models (LLMs) can sometimes “hallucinate”—that’s tech speak for giving wrong info confidently. RAG fixes that by grounding responses in real data. From what I’ve seen in recent NVIDIA updates, like their blog posts, NeMo Retriever is designed for enterprises to build these pipelines efficiently. For example, a recent InfoWorld article highlighted how it ingests PDFs and generates reports quickly, using a plan-reflect-refine approach to make the AI smarter.

Lila: Hallucinate? That’s funny. So, how does NeMo Retriever fit in? Is it just software, or does it need special hardware?

John: Great question. NeMo Retriever is part of NVIDIA’s AI ecosystem, optimized for their GPUs. It’s a set of microservices that handle the retrieval part—embedding models to search data, reranking to pick the best matches, and more. According to NVIDIA’s technical blog from a couple of weeks ago, it enhances RAG by adding reasoning to handle unclear queries, making it perfect for business use.

Key Features of NVIDIA NeMo Retriever

Lila: Microservices sound technical. Can you break down the key features? Maybe list them out so it’s easy to follow?

John: Sure thing! Here’s a quick list of standout features based on the latest from NVIDIA’s announcements and hands-on reviews:

- Embedding Models: These turn text into vectors for fast searching. NeMo’s version is optimized for accuracy and speed on a single GPU.

- Reranking: After retrieving info, it re-evaluates and picks the most relevant bits to avoid irrelevant noise.

- Multimodal Support: Handles not just text but images, charts, and more—super useful for complex docs, as per their March 2024 intro to multimodal RAG.

- Integration with NIMs: NVIDIA Inference Microservices make it production-ready, integrating with platforms like Cohesity or Snowflake for enterprise data.

- Agentic Pipelines: Builds smarter agents that plan and refine responses, as described in a July 2024 blog on Llama 3.1 integration.
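
The first two features in that list, embedding and reranking, usually work as a two-stage search. The Python sketch below shows the pattern with toy stand-ins: `embed` is a plain word-count vector and `rerank_score` checks word-pair order, both invented here for illustration. NeMo Retriever's actual embedding and reranking models are neural networks served as microservices, and nothing below reflects their API.

```python
import math
import re

# Hypothetical corpus for the demo.
DOC_A = "User password policy: every user password must contain a symbol."
DOC_B = "To reset user password go to the admin console and open the account page."
DOC_C = "The cafeteria menu changes every Monday."
DOCS = [DOC_A, DOC_B, DOC_C]


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


VOCABULARY = sorted({word for doc in DOCS for word in tokenize(doc)})


def embed(text):
    """Toy embedding: a word-count vector over the corpus vocabulary."""
    tokens = tokenize(text)
    return [tokens.count(word) for word in VOCABULARY]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def first_stage(query, docs, top_k=2):
    """Stage 1: fast vector search that returns a short candidate list."""
    query_vec = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:top_k]


def rerank_score(query, doc):
    """Toy reranker: share of adjacent query word pairs found in the document."""
    q, d = tokenize(query), tokenize(doc)
    q_pairs, d_pairs = set(zip(q, q[1:])), set(zip(d, d[1:]))
    return len(q_pairs & d_pairs) / len(q_pairs) if q_pairs else 0.0


def rerank(query, candidates):
    """Stage 2: re-score only the candidates with a more careful comparison."""
    return sorted(candidates, key=lambda d: rerank_score(query, d), reverse=True)


query = "reset user password"
candidates = first_stage(query, DOCS)
reranked = rerank(query, candidates)
print(reranked[0])
```

In this made-up corpus the vector search ranks the password policy first because it repeats the query words, and the reranker then promotes the reset instructions because they match the query's word order. That division of labor (a cheap search over everything, a costlier check over a few candidates) is the general reason pipelines add a reranking step.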

Lila: Wow, that list makes it clearer. Multimodal—what’s that mean in practice? Like, can it read graphs from a PDF?

John: Exactly! Think of a financial report with charts. NeMo Retriever can extract and understand those visuals, not just the words. A recent post from July 2025 on ragaboutit.com talks about how NVIDIA’s Llama 3.2 NeMo Retriever is revolutionizing enterprise AI by handling multimodal data, reducing a RAG failure rate that the post puts at 80% of deployments.

Current Developments and Trends

Lila: Speaking of trends, I’ve seen X buzzing about NVIDIA’s latest updates. What’s new as of now—in August 2025?

John: Based on recent searches, the buzz is about efficiency. A June 2025 article from blockchain.news highlighted how NeMo Retriever extracts multimodal data on a single GPU, cutting costs. Plus, Cohesity’s December 2024 blog shared results on improved recall in RAG using NeMo microservices. On X, verified accounts like @NVIDIAAI are posting about integrations with Llama models for agentic RAG, making AI more adaptive to vague queries.

Lila: Adaptive to vague queries? Like if I ask something unclear, it figures it out?

John: Yep! A two-week-old NVIDIA blog explains using Llama Nemotron models for reasoning in RAG pipelines. It adds a layer where the AI reflects and refines, boosting accuracy. Trends show enterprises using this for code development in high-performance computing, as per a May 2024 post.
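
Here is one way to picture that reflect-and-refine layer, as a small Python sketch. Everything in it is an assumption made for illustration: the `KNOWLEDGE_BASE`, the `EXPANSIONS` table, and the overlap threshold are invented, and in the pipelines described above a reasoning model would do the reflecting and rewriting rather than a lookup table.

```python
import re

# Hypothetical knowledge base and query-expansion table, invented for this demo.
KNOWLEDGE_BASE = [
    "Quarterly revenue for the cloud division rose to 4.2 million dollars.",
    "The onboarding checklist covers laptop setup and badge pickup.",
]
EXPANSIONS = {"sales": "revenue", "numbers": "quarterly"}


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query):
    """Return the best-matching passage and its word-overlap score."""
    query_words = tokenize(query)
    best = max(KNOWLEDGE_BASE, key=lambda p: len(query_words & tokenize(p)))
    return best, len(query_words & tokenize(best))


def reflect(score, threshold=2):
    """Decide whether the retrieved evidence is strong enough to answer from."""
    return score >= threshold


def refine(query):
    """Rewrite a vague query by appending related terms (stand-in for an LLM rewrite)."""
    extra = [EXPANSIONS[w] for w in sorted(tokenize(query)) if w in EXPANSIONS]
    return query + " " + " ".join(extra) if extra else query


def answer_with_reflection(query, max_rounds=3):
    """Retrieve, reflect on the result, and refine the query until evidence is good."""
    history = []
    for _ in range(max_rounds):
        passage, score = retrieve(query)
        history.append((query, score))
        if reflect(score):
            return passage, history
        refined = refine(query)
        if refined == query:  # nothing left to try
            break
        query = refined
    return None, history


passage, history = answer_with_reflection("cloud sales numbers")
for attempted_query, score in history:
    print(score, attempted_query)
```

The first attempt matches only one word, so the loop rewrites the query and tries again before accepting a passage. Capping the rounds with `max_rounds` keeps a hopeless query from looping forever.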

Challenges and How NeMo Addresses Them

Lila: Sounds powerful, but are there challenges? I worry about data privacy or setup complexity for beginners.

John: Valid concerns. Challenges include ensuring data relevance, handling large datasets without high costs, and avoiding biases in retrieval. NeMo tackles these with efficient microservices that can run on a single GPU, as noted in recent updates. For privacy, it’s designed for on-premises or secure cloud setups. An April 2024 explainer from NVIDIA stresses how RAG enhances responses without retraining models, keeping things secure and cost-effective.

Lila: And for industries like architecture or engineering? I saw something about AEC.

John: Right! A December 2024 NVIDIA guide for AEC (architecture, engineering, construction) shows RAG helping with blueprint and data analysis, making LLMs more practical there.

Future Potential and Getting Started

Lila: What’s next for this tech? Any predictions based on trends?

John: Looking ahead, with multimodal capabilities advancing, we might see RAG in AR/VR or real-time analytics. NVIDIA’s July 2024 announcement of NeMo Retriever NIMs points to broader adoption in platforms like NetApp. For getting started, check NVIDIA’s developer blogs, which have tutorials for building pipelines with Llama 3.1.

Lila: Awesome. Any FAQs you hear often?

John: Sure! Common ones: Is it free? NeMo has open-source elements, but enterprise features need NVIDIA hardware. How accurate? Up to 90% better recall per Cohesity’s tests. Scalable? Yes, for big data.
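
Since recall comes up as the accuracy measure, here is a short Python sketch of how recall at k is commonly computed for a retriever. The document IDs and both result lists are made up for illustration; they are not Cohesity's data and say nothing about NeMo Retriever's real numbers.

```python
def recall_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant_ids:
        return 0.0
    top_k = set(retrieved_ids[:k])
    return len(top_k & set(relevant_ids)) / len(relevant_ids)


# Hypothetical ground truth and two hypothetical retrievers' ranked results.
relevant = {"doc2", "doc5", "doc9"}
baseline = ["doc1", "doc2", "doc3", "doc4", "doc6"]
improved = ["doc2", "doc9", "doc1", "doc5", "doc3"]

baseline_recall = recall_at_k(baseline, relevant, k=5)
improved_recall = recall_at_k(improved, relevant, k=5)
print(f"baseline: {baseline_recall:.2f}, improved: {improved_recall:.2f}")
```

A retriever with higher recall hands the language model more of the passages it actually needs, which is why recall is a natural headline metric for RAG.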

Wrapping It Up

John: On reflection, NVIDIA NeMo Retriever is making RAG accessible and powerful, turning AI from a novelty into a business essential. It’s exciting to see how it’s evolving with real-world integrations, grounded in solid tech from sources like NVIDIA’s own blogs.

Lila: My takeaway: RAG with NeMo feels like giving AI a memory boost—super practical for everyday use. Thanks, John!

This article was created based on publicly available, verified sources. References:

- Retrieval-augmented generation with Nvidia NeMo Retriever | InfoWorld

- How to Enhance RAG Pipelines with Reasoning Using NVIDIA Llama Nemotron Models | NVIDIA Technical Blog

- The Multimodal RAG Revolution: How NVIDIA’s Llama 3.2 NeMo Retriever Is Redefining Enterprise AI

- Build an Agentic RAG Pipeline with Llama 3.1 and NVIDIA NeMo Retriever NIMs | NVIDIA Technical Blog

- New NVIDIA NeMo Retriever Microservices Boost LLM Accuracy and Throughput | NVIDIA Blog