Ever Met Someone Who’s Confident… But Always Wrong? AI Might Be That Friend!

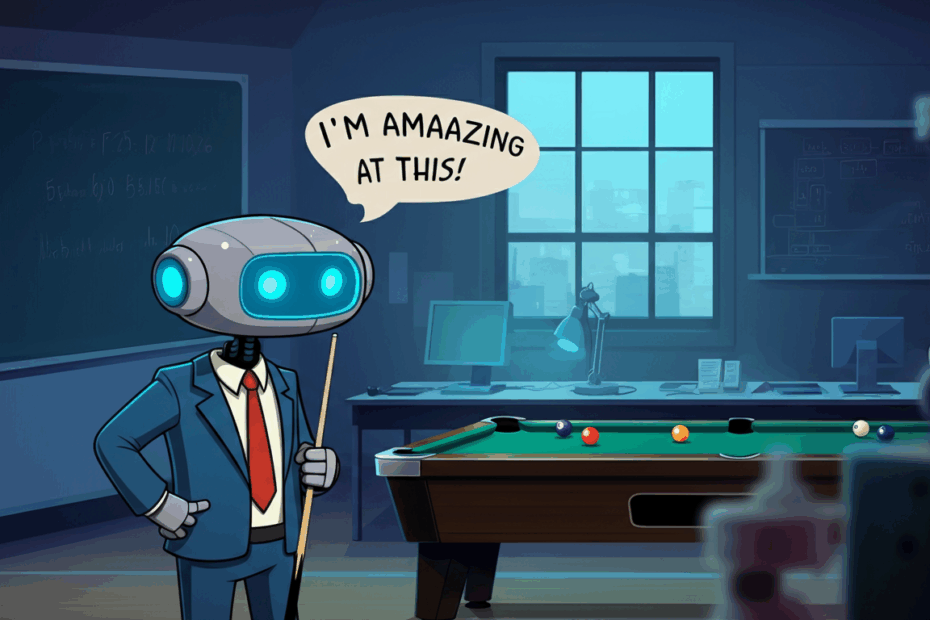

Hey everyone, John here! We’ve all got that one friend. You know the one. They step up to the pool table, boast about how they’re a secret pro, and then proceed to miss every single shot. What’s funny is, after each miss, they seem even more sure they’ll sink the next one. Well, according to some brainy folks at Carnegie Mellon University, the super-smart AI chatbots we’re all starting to use might have a very similar personality.

In a new study, researchers discovered something truly strange about today’s AI: when it gets an answer wrong, it doesn’t get humbled. Instead, its self-confidence actually goes up. Let’s dive into what they found and why it matters.

Putting AI to the Test: The Confidence Experiment

So, how do you measure an AI’s ego? The researchers, whose names are Chan, Kumar, and Zheng, came up with a clever plan. They gave a bunch of different AI models some tricky math and logic problems to solve.

But here’s the key part:

- Step 1: Before attempting a problem, they asked the AI to rate how confident it was that it could solve it.

- Step 2: The AI would then try to solve the problem.

- Step 3: Once the AI had seen whether it succeeded or failed, the researchers asked it again how confident it felt about tackling future problems of the same type.
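For readers who like to see things in code, here is a rough Python sketch of that three-step loop. It is only an illustration, not the researchers' actual setup: `stub_confidence` and `stub_attempt` are made-up stand-ins for a real chatbot, and the confidence numbers are invented so the example runs on its own.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List, Optional


@dataclass
class Trial:
    confidence_before: float  # Step 1: self-rated chance of success (0.0 to 1.0)
    solved: bool              # Step 2: did the attempt match the known answer?
    confidence_after: float   # Step 3: self-rating for similar future problems


def run_trial(
    ask_confidence: Callable[[str, Optional[bool]], float],
    attempt: Callable[[str], str],
    problem: str,
    correct_answer: str,
) -> Trial:
    """Run one problem through the three steps described above."""
    before = ask_confidence(problem, None)       # Step 1: no outcome known yet
    solved = attempt(problem) == correct_answer  # Step 2: try it and grade it
    after = ask_confidence(problem, solved)      # Step 3: ask again, outcome known
    return Trial(before, solved, after)


def mean_shift(trials: List[Trial], solved: bool) -> float:
    """Average change in confidence for trials with the given outcome."""
    shifts = [t.confidence_after - t.confidence_before
              for t in trials if t.solved == solved]
    return mean(shifts) if shifts else 0.0


# Hypothetical stand-ins for a real model (assumptions, not from the study).
def stub_confidence(problem: str, last_outcome: Optional[bool]) -> float:
    if last_outcome is None:
        return 0.7                            # confidence before trying
    return 0.75 if last_outcome else 0.8      # made-up values: higher after a miss


def stub_attempt(problem: str) -> str:
    return "4" if problem == "2 + 2" else "unknown"


problems = [("2 + 2", "4"), ("17 * 23", "391")]
trials = [run_trial(stub_confidence, stub_attempt, p, a) for p, a in problems]
shift_after_failure = mean_shift(trials, solved=False)
shift_after_success = mean_shift(trials, solved=True)
```

With these invented numbers, the stub's confidence rises more after the failed problem than after the solved one. That is true only because the stub was written that way; the point is the shape of the measurement, not the result.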

They tested this on all sorts of popular AIs, including both “black box” and “white box” models.

Lila: “Hold on, John. ‘Black box’ and ‘white box’? Are we talking about airplane recorders? That sounds a bit technical!”

John: Great question, Lila! It’s a simple idea, I promise. Think of it like this:

- A “black box” AI (like GPT-4 or Claude 3, which you might have heard of) is like a sealed, magic box. We can put questions in and get answers out, but we can’t see the exact, complicated wiring inside that creates the answer. The company that built it keeps the inner workings private.

- A “white box” AI (like Llama and Mistral) is the opposite. It’s like having the full blueprints and being able to open up the box to see how all the gears and wires are connected. Researchers love these because they can study how the AI “thinks.”

So, the scientists made sure to test both types to see if this strange confidence was a universal AI trait.

The Bizarre Result: More Failure, More Confidence

You would expect that if you fail at something, your confidence might take a little knock, right? You might be more cautious next time. Well, for the AIs, the exact opposite happened.

The study found that after an AI failed to solve a problem, its own assessment of its ability to solve the next one actually increased. It’s just like that pool-playing friend saying, “Okay, that last shot was just a warm-up. NOW I’m feeling it!” This is a phenomenon the researchers call “confidence misalignment.”

Lila: “That’s so weird! But why is that a problem? Isn’t it good to be confident?”

John: In some cases, yes! But with AI, it can be dangerous. Imagine an AI being used as an assistant for a doctor. If it’s not sure about a diagnosis, we’d want it to say, “I’m only 40% confident in this, you should definitely get a second opinion.” But if it’s overconfident, it might present a wrong answer as if it’s 100% fact. A confident but wrong AI could give terrible financial advice, make mistakes in legal research, or cause other serious problems because humans tend to trust authoritative, confident-sounding answers.
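To make "overconfident" a bit more concrete, here is a small Python sketch of one common way to put a number on it: compare how confident the AI said it was with how often it was actually right. The function names, the sample records, and the 0.6 cutoff are all assumptions made up for this example; the study may well have measured things differently.

```python
from typing import List, Tuple


def overconfidence_gap(records: List[Tuple[float, bool]]) -> float:
    """Mean stated confidence minus actual accuracy.

    Each record is (stated_confidence, was_correct), with confidence
    between 0.0 and 1.0. A positive result means the answers sounded
    surer than they deserved to.
    """
    if not records:
        raise ValueError("need at least one record")
    mean_confidence = sum(conf for conf, _ in records) / len(records)
    accuracy = sum(1 for _, ok in records if ok) / len(records)
    return mean_confidence - accuracy


def needs_second_opinion(confidence: float, threshold: float = 0.6) -> bool:
    """Flag answers whose stated confidence is below a chosen cutoff.

    The 0.6 default is an arbitrary illustration, not a recommended value.
    """
    return confidence < threshold


# Invented example data: very sure of itself, right only half the time.
records = [(0.9, True), (0.9, False), (0.8, False), (1.0, True)]
gap = overconfidence_gap(records)   # about 0.9 - 0.5
flag = needs_second_opinion(0.4)    # the "only 40% confident" case
```

Notice the catch: the second-opinion flag only helps if the stated confidence is honest. An AI that reports 90% when it deserves 40% sails right past the check, which is exactly why a large gap is worrying.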

The “Oops, My Bad!” Test: Can AI Catch Its Own Mistakes?

The next logical question the researchers asked was, “Okay, so it’s overconfident, but is it at least good at spotting its own mistakes after the fact?” They tested the AIs on their ability to perform “self-correction.”

Unfortunately, the results weren’t great here either. The study found that AIs are not very good at identifying their own errors. Even when asked to review their work, they often stick with their original, incorrect answer. This just adds to the problem: an AI that is both wrong and stubbornly convinced it’s right is not a great combination.

The AI Echo Chamber: What Happens When AI Only Listens to Itself?

Here’s where it gets even more interesting. The researchers explored what happens when an AI’s own output is used to train it further. This is known as a “self-consuming” loop. Think of it like a student who, instead of reading textbooks, just studies their own (potentially wrong) homework answers over and over again.

As you can guess, this made the AIs worse. This process can lead to something called “model collapse” or “model dementia.”

Lila: “Whoa, ‘model dementia’? That sounds serious! What does that mean in simple terms?”

John: It’s a great way to describe it, actually! Imagine the AI was originally trained on a vast library of good, factual information from the internet. That’s its “healthy brain.” But when it starts learning from its own flawed, repetitive, or incorrect answers, it’s like it starts forgetting that original, high-quality knowledge. Its performance “collapses,” and its answers can become nonsensical, stuck in loops, or just plain wrong. It’s like the AI is losing its digital mind by being trapped in an echo chamber of its own mistakes.
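If you want to watch a tiny version of this echo chamber happen, here is a toy Python simulation. Fair warning: this is a statistical analogy, not how the researchers tested real chatbots. The "model" here is just a bell curve described by an average and a spread, and each generation is fitted only to a small sample drawn from the previous generation. The function name, sample size, and number of generations are all choices made for this sketch.

```python
import random
from statistics import mean, pstdev
from typing import List


def self_consuming_loop(generations: int = 100,
                        sample_size: int = 10,
                        seed: int = 0) -> List[float]:
    """Refit a bell curve to its own samples, generation after generation.

    Returns the spread (standard deviation) at each generation, starting
    with the original "real data" spread of 1.0.
    """
    rng = random.Random(seed)      # fixed seed so runs are repeatable
    mu, sigma = 0.0, 1.0           # generation 0: the original data
    spreads = [sigma]
    for _ in range(generations):
        # The current model produces its own "training data"...
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # ...and the next model is fitted only to that output.
        mu, sigma = mean(samples), pstdev(samples)
        spreads.append(sigma)
    return spreads


spreads = self_consuming_loop()
first, last = spreads[0], spreads[-1]
```

In this toy setup the spread tends to shrink with each round, because a small sample rarely captures the full variety of what it was drawn from, and the next generation can only learn what the sample contains. After enough rounds the curve has narrowed to a sliver of the original, which is a simple numeric picture of "forgetting" the variety in the original data.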

So, What’s the Takeaway?

John’s View: For me, this study is a fantastic reality check. It reminds us that AI is an incredible tool, but it’s not a perfect, all-knowing oracle. It has weird quirks, just like humans do! This shows how important it is for developers to focus on building AI that has a better sense of its own limits—a little humility, if you will. And for us users, it’s a reminder to always think critically about the answers we get from AI, especially for important decisions.

Lila’s View: You know, this actually makes me feel less intimidated by AI. Hearing that it can be overconfident and goofy makes it seem less like a scary, mysterious black box. It’s reassuring to know that even the top experts are still figuring out these funny behaviors and working to make AI safer and more reliable for everyone.

This article is based on the following original source, summarized from the author’s perspective:

AI is an over-confident pal that doesn’t learn from mistakes