Is Everyone Building AI “Workers” the Wrong Way? A New Company Thinks So.

Hello everyone, John here! It feels like every day there’s a new AI tool that promises to change the world. Many of them are what we call “AI agents.”

(Lila: “John, hang on a second. I’m a total beginner here. What exactly is an AI agent?”)

That’s a perfect question to start with, Lila! Imagine you could hire a tiny, super-fast digital assistant that lives inside your computer. You could tell it, “Hey, book a flight to Hawaii for next Tuesday,” or “Take all the sales data from this spreadsheet and turn it into a presentation.” The AI would then open the right apps, click the buttons, and type in the information all by itself. That digital assistant is an AI agent.

Many big companies are building these agents using the same technology behind tools like ChatGPT. But one expert, who used to work at Google’s famous AI lab DeepMind, says that’s a huge mistake. His name is Ang Li, and his new company, Simular, is doing things completely differently.

Let’s dive into why he thinks most of the world is on the wrong track, and what his team is doing instead.

The Problem: AI That Knows Everything But Understands Nothing

Ang Li points out a major flaw with building AI agents on top of the popular AI of the day: Large Language Models, or LLMs.

(Lila: “Whoa, another technical term! What are LLMs?”)

Great question, Lila. An LLM is an AI that has been trained on a gigantic library of text from books and the internet. That is why LLMs are so good at chatting, writing emails, and answering questions. The models behind ChatGPT are the most famous example. They are masters of language.

But here’s the problem, according to Li. While an LLM can describe how to do a task, it doesn’t really understand the task itself. He gives a fantastic analogy: an LLM is like someone who has read every book ever written about swimming. They can tell you about different strokes, breathing techniques, and water safety. But if you throw them in a pool, they’ll sink because they’ve never actually been in the water.

He says these LLM-based agents are “stateless.”

(Lila: “Stateless? Does that mean they have no country?”)

Haha, not quite! In the computer world, “stateless” means the AI has no memory of what just happened. Each time it performs an action, it’s like it’s starting fresh. Imagine trying to follow a recipe, but every time you add an ingredient, you completely forget all the previous steps you took. You’d keep adding flour over and over! That’s the problem. To do a task on a computer, you need to remember what you clicked, what screen you’re on, and what the goal is. LLMs aren’t naturally built for that kind of step-by-step memory.
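For readers who like to see ideas in code, here is a minimal Python sketch of the recipe analogy. It is only an illustration of "stateless" versus "stateful," not how any real LLM agent or Simular's product works, and the names (`RECIPE`, `stateless_next_step`, `StatefulCook`) are made up for this example.

```python
RECIPE = ["add flour", "add eggs", "add sugar"]


def stateless_next_step(recipe):
    """Pick the next step with no memory of what was already done."""
    # Nothing is remembered between calls, so the answer never changes.
    return recipe[0]


class StatefulCook:
    """Keeps track of progress, so each call moves the recipe forward."""

    def __init__(self, recipe):
        self.recipe = recipe
        self.steps_done = 0  # the "state": how far along we are

    def next_step(self):
        if self.steps_done >= len(self.recipe):
            return "finished"
        step = self.recipe[self.steps_done]
        self.steps_done += 1
        return step


# The forgetful helper keeps adding flour over and over.
forgetful = [stateless_next_step(RECIPE) for _ in range(3)]

# The cook with memory works through the recipe and then stops.
cook = StatefulCook(RECIPE)
remembered = [cook.next_step() for _ in range(4)]

print(forgetful)
print(remembered)
```

The only difference between the two is one small counter, `steps_done`. That remembered bit of progress is what "state" means here.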

The Simular Solution: Learning Like a Human

So, if LLMs aren’t the right tool for the job, what is? Simular’s approach is to teach its AI agents the same way a person learns a new computer program: by watching and practicing.

It’s a fascinating process that works in three main steps:

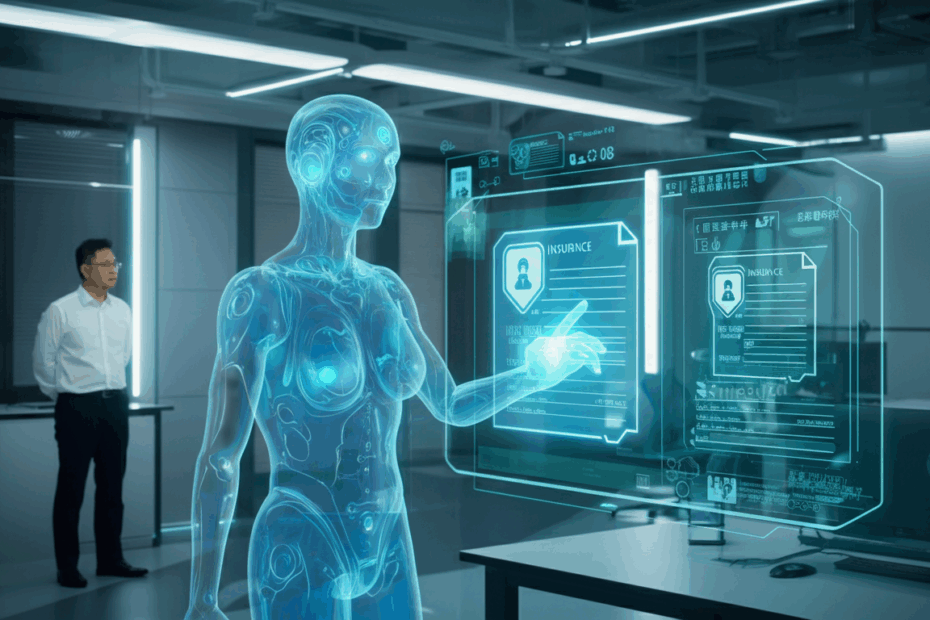

- Observation: First, the Simular agent simply watches a human do a task. For example, it might watch an insurance worker process a claim, which involves clicking through different forms, copying information, and submitting it.

- Mapping: As it watches, the AI doesn’t just record the clicks. It builds a “mental map” of the software’s User Interface, or UI.

- Practice Makes Perfect: This is the most important part. After building its map, the AI starts practicing the task on its own. It tries to fill out the form, click the buttons, and reach the goal. This is where something called Reinforcement Learning comes in.

(Lila: “What’s a User Interface?”)

The UI is just the screen you see and interact with! It’s all the buttons, menus, and text boxes in an application. So, Simular’s AI learns what each button does and how the different screens are connected, creating a map of the program.
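To make the "map" idea a little more concrete, here is a small Python sketch. It assumes the map can be stored as a simple lookup of screens and the buttons that connect them; the screen and button names are invented for illustration, and the article's source does not say how Simular actually represents its map.

```python
from collections import deque

# Hypothetical UI map: each screen lists its buttons and where they lead.
UI_MAP = {
    "home": {"New Claim": "claim_form", "Search": "search"},
    "search": {"Back": "home"},
    "claim_form": {"Next": "review", "Cancel": "home"},
    "review": {"Submit": "confirmation", "Edit": "claim_form"},
    "confirmation": {},
}


def find_clicks(ui_map, start, goal):
    """Return the shortest list of button clicks from start to goal, or None."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        screen, clicks = queue.popleft()
        if screen == goal:
            return clicks
        for button, next_screen in ui_map[screen].items():
            if next_screen not in visited:
                visited.add(next_screen)
                queue.append((next_screen, clicks + [button]))
    return None  # the goal screen cannot be reached from here


print(find_clicks(UI_MAP, "home", "confirmation"))
```

With a map like this, getting from the home screen to the confirmation page becomes a route-finding problem, much like asking a navigation app for directions.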

The Secret Sauce: Reinforcement Learning

This might sound complicated, but the idea is actually very simple and you’ve probably used it yourself!

(Lila: “I’ve used Reinforcement Learning? How?”)

Think about how you train a dog, Lila. When the dog sits on command, you give it a treat. The “treat” is a positive reward that reinforces the good behavior. The dog learns, “When I do this, I get something good,” so it’s more likely to sit next time.

Reinforcement Learning works the same way for AI. When Simular’s agent performs a step correctly (like entering a name in the right box), the system gives it a small digital “reward.” If it makes a mistake (like clicking the wrong button), it gets no reward or a small penalty. By repeatedly trying the task and aiming for the highest score (the most rewards), the AI slowly but surely masters the process. It learns the “rules” of the software from experience, not just from a textbook.
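If you are curious what "rewards" look like in practice, here is a toy Python sketch. It is a heavily simplified, textbook-style version of the idea (each step is scored on its own, with no look-ahead), not Simular's actual training method. The three-step form, the action names, and the numbers are all assumptions made up for this example.

```python
import random

# Hypothetical three-step form: at each step exactly one action is correct.
ACTIONS = ["click_submit", "type_date", "type_name"]
CORRECT = ["type_name", "type_date", "click_submit"]


def train(episodes=50, alpha=0.5, epsilon=0.1, seed=0):
    """Learn a score for every action at every step from rewards alone."""
    rng = random.Random(seed)
    # value[step][action]: running estimate of the reward for that choice.
    value = [{a: 0.0 for a in ACTIONS} for _ in CORRECT]
    for _ in range(episodes):
        step = 0
        while step < len(CORRECT):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # occasionally try something random
            else:
                action = max(ACTIONS, key=lambda a: value[step][a])  # best so far
            # The "treat": +1 for the right action, -1 for a mistake.
            reward = 1.0 if action == CORRECT[step] else -1.0
            # Nudge the stored score toward the reward just received.
            value[step][action] += alpha * (reward - value[step][action])
            if reward > 0:
                step += 1  # only a correct action moves the form forward
    return value


def greedy_policy(value):
    """For each step, pick the action with the highest learned score."""
    return [max(ACTIONS, key=lambda a: value[s][a]) for s in range(len(value))]


learned = greedy_policy(train())
print(learned)
```

Notice that the agent is never told the right answers. It only sees the score after each attempt, and the correct sequence emerges from repeated practice.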

This way, the agent builds a real, practical understanding. It’s no longer the person who has only read about swimming; it’s the person who has spent hours in the pool, practicing and improving until swimming becomes second nature.

So, Where Is This Technology Being Used?

Simular isn’t trying to build an agent that can do everything at once. They are starting with industries that have a lot of repetitive, form-heavy computer work. Think about industries like:

- Insurance: Processing claims, underwriting policies, and managing customer data involves tons of clicking and data entry across multiple systems.

- Healthcare: Managing patient records, billing, and appointments is another area full of repetitive digital paperwork.

By automating these boring but necessary tasks, Simular’s agents can free up human workers to focus on more important things, like talking to customers or making complex decisions. The goal isn’t to replace people, but to take away the most tedious parts of their jobs.

A Few Final Thoughts

John’s Take: I find this approach really refreshing. For a while now, the AI world has been obsessed with making LLMs bigger and better, trying to make them the solution for every problem. Ang Li’s perspective is a great reminder that the best tool depends on the job. For tasks that require action and consequence, learning by doing seems far more robust than learning by reading. It’s just common sense.

Lila’s Take: As a beginner, this was so much easier to understand! The swimming analogy really clicked for me. It seems obvious now that you can’t expect an AI that’s good with words to automatically be good at doing things. Teaching it the way you’d teach a person a new skill makes total sense. I’m excited to see how this helps people in their everyday jobs!

This article is based on the following original source, summarized from the author’s perspective:

Former Google DeepMind engineer behind Simular says other AI agents are doing it wrong