Cracking the Code: Building a Flexible Data Pipeline with Azure Data Factory

John: Welcome back to the blog, everyone. Today, we’re diving into a topic that’s fundamental to modern data engineering but can often seem intimidating: building a robust ETL framework. Specifically, we’ll explore a powerful, modern approach using Azure Data Factory, centered around a concept called “metadata-driven” design.

Lila: Hi John! Great to be co-authoring this with you. So, right off the bat, you’ve used a few big terms there. Let’s break it down for our readers who might be new to this. What exactly is “ETL,” and why does it need a “framework”?

John: An excellent place to start, Lila. ETL stands for Extract, Transform, and Load. Think of it as the plumbing of the data world. You Extract data from various sources—like sales databases, user activity logs, or social media feeds. You then Transform it, which means cleaning, standardizing, and reshaping it into a useful format. Finally, you Load it into a destination, such as a data warehouse, where it can be analyzed for business insights.

Lila: So it’s like a data assembly line. You grab raw materials from different suppliers, process them on the factory floor, and then store the finished product in a warehouse. What’s wrong with the traditional way of building these assembly lines?

John: Precisely. The problem is that traditionally, each assembly line was custom-built. If you wanted to get data from a new source, say a new SaaS application, you had to build an entirely new, rigid pipeline from scratch. This is slow, expensive, and creates a maintenance nightmare. A “framework” is a reusable, standardized way to build these pipelines. And a metadata-driven framework is the next evolution of that idea—it’s like having a single, super-flexible assembly line that can reconfigure itself on the fly based on a simple set of instructions.

What is a Metadata-Driven ETL Framework?

Lila: Okay, “metadata-driven” sounds key here. I know metadata is often described as “data about data.” How does that apply to an ETL pipeline in Azure Data Factory?

John: You’ve got it. In this context, metadata is the set of instructions I mentioned. Instead of hard-coding the details—like the source server name, the specific table to copy, or the destination folder—directly into our data pipeline, we store all of that information in a central repository, typically a database. This “instruction manual” is our metadata.

Lila: So the pipeline itself becomes generic? Like a universal remote control?

John: That’s a perfect analogy. The pipeline in Azure Data Factory (often abbreviated as ADF) becomes a generic orchestrator. When it runs, its first step is to read the metadata. The metadata tells it: “Today, you need to connect to this SQL server, copy the ‘Sales2024’ table, and load it into that data lake folder.” If tomorrow we need to copy a different table or add a new source, we don’t change the pipeline’s code. We simply update the instruction manual—our metadata table. This makes the entire system incredibly flexible, scalable, and easier to manage.
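To make the "instruction manual" idea a little more concrete, here is a minimal Python sketch. It is not ADF code, just an illustration of the principle. The field names (`source_server`, `source_table`, `destination_folder`), the server name, and the functions `plan_copy` and `run_pipeline` are all hypothetical; in a real framework the rows would live in the metadata database and the copy would be done by an ADF activity.

```python
# Hypothetical metadata rows; in practice these would be read from a database table.
# The server name and folder are made-up examples.
METADATA = [
    {"source_server": "sql-sales-01", "source_table": "Sales2024",
     "destination_folder": "/raw/sales/"},
]


def plan_copy(row: dict) -> str:
    """Describe the copy a generic pipeline would perform for one metadata row."""
    return (f"copy {row['source_table']} from {row['source_server']} "
            f"to {row['destination_folder']}")


def run_pipeline(metadata: list) -> list:
    """Generic orchestrator: the code knows how to copy, the metadata says what to copy."""
    return [plan_copy(row) for row in metadata]


if __name__ == "__main__":
    for step in run_pipeline(METADATA):
        print(step)
```

Adding a second dictionary to `METADATA` adds a second copy step without touching `run_pipeline`, which is the whole point of the design.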

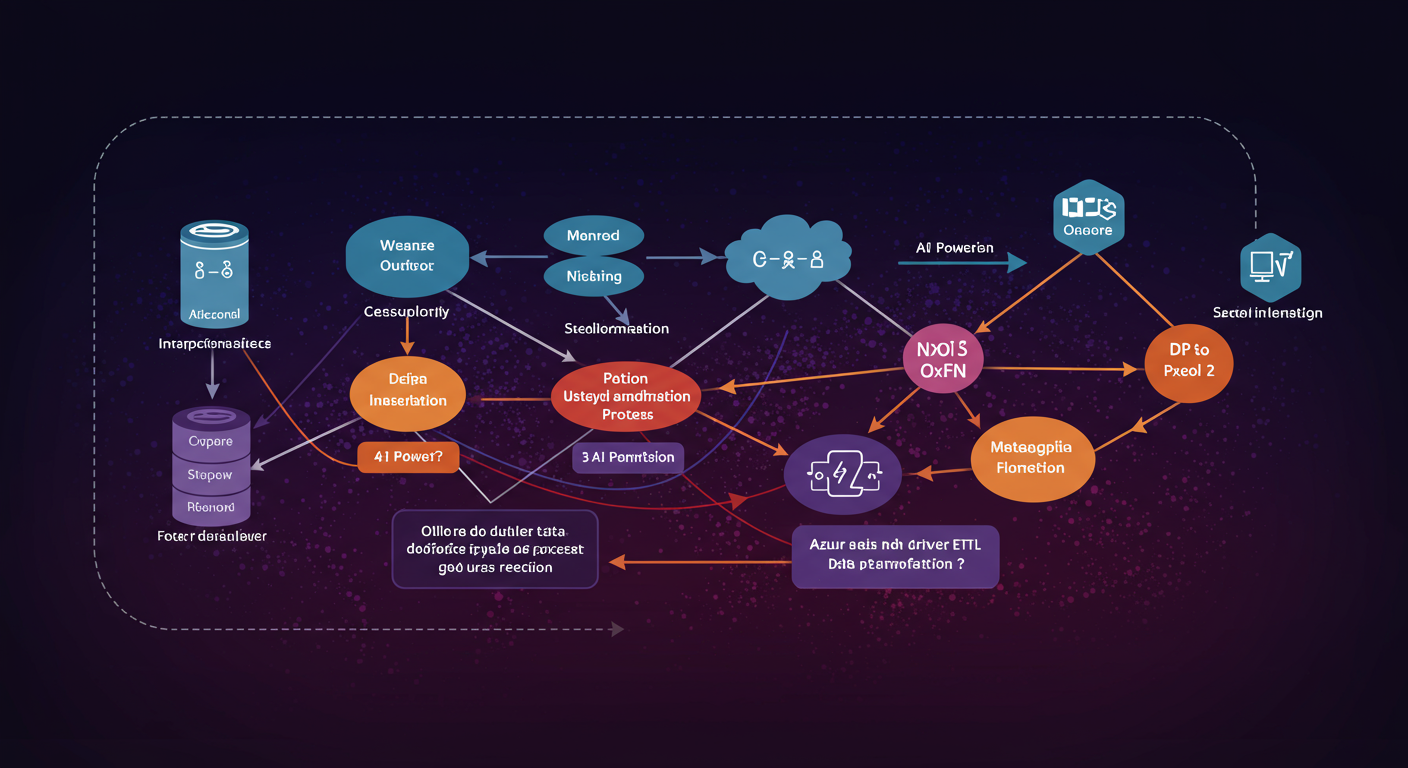

Architectural Deep Dive: The Core Components

Lila: That makes a lot of sense. It separates the “what to do” from the “how to do it.” So, if we were to build this, what are the key architectural pieces we’d need in the Azure cloud?

John: Architecting this kind of framework involves a few key Azure services working in harmony. Let’s walk through the main components.

- Azure Data Factory (ADF): This is our main engine. ADF is a cloud-based data integration service that allows you to create, schedule, and orchestrate data workflows, or “pipelines,” without writing a lot of code. In our framework, it’s the worker that reads the metadata and executes the tasks.

- Azure SQL Database: This serves as our metadata repository. We create a set of tables here to store all the configuration details for our pipelines. Think of it as the framework’s brain, holding all the instructions.

- Azure Key Vault: Security is paramount. We can’t store sensitive information like database passwords or API keys in our metadata tables in plain text. Azure Key Vault is a secure vault for storing these secrets. Our ADF pipelines will have permission to retrieve secrets from the Key Vault at runtime, so credentials are never exposed.

- Azure Logic Apps or Function Apps: These are used for triggering our pipelines. We might use a Logic App to run a pipeline on a schedule (e.g., every night at 2 AM) or an Azure Function to trigger it based on an event, like a new file arriving in a storage account.

- Azure Storage (Blob or Data Lake): This is often the destination for our data, especially in modern data platforms. It’s where the “L” in ETL (Load) happens, staging the transformed data in a cost-effective and scalable way.

Lila: So, if I’m picturing the flow: A Logic App trigger says “Go!” Azure Data Factory wakes up, asks the Azure SQL Database for its instructions, grabs the necessary keys from Azure Key Vault, and then starts moving data from a source into Azure Storage. Is that about right?

John: That’s a flawless summary, Lila. You’ve captured the essence of the orchestration perfectly. Each component has a distinct role, which makes the system modular and robust.
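One detail in that flow is worth sketching: the metadata should hold only the name of a secret, and the secret itself is looked up at runtime. The Python below illustrates that with an ordinary dictionary standing in for Azure Key Vault. The column names, the secret name, and the `resolve_connection` function are assumptions made for illustration; a real pipeline would typically reach Key Vault through an ADF linked service rather than code like this.

```python
# Stand-in for Azure Key Vault: a plain dict mapping secret names to values.
FAKE_VAULT = {"sales-db-password": "not-a-real-password"}

# Hypothetical connection metadata row: it stores the NAME of the secret,
# never the secret value itself.
CONNECTION_ROW = {
    "connection_id": 1,
    "server": "sql-sales-01",
    "user": "etl_reader",
    "password_secret_name": "sales-db-password",
}


def resolve_connection(row: dict, vault: dict) -> dict:
    """Return runtime connection settings with the secret looked up by name."""
    secret_name = row["password_secret_name"]
    if secret_name not in vault:
        raise KeyError(f"secret '{secret_name}' not found in vault")
    # Copy every field except the secret reference, then attach the real value.
    resolved = {k: v for k, v in row.items() if k != "password_secret_name"}
    resolved["password"] = vault[secret_name]
    return resolved


if __name__ == "__main__":
    settings = resolve_connection(CONNECTION_ROW, FAKE_VAULT)
    print(sorted(settings))  # field names only; avoid printing the secret
```

The metadata row never contains the password, so anyone who can read the metadata tables still cannot see the credentials.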

The Technical Mechanism: How It All Works

Lila: Let’s get into the weeds a bit. You mentioned “pipelines.” I’ve heard about a “parent-child pipeline” design pattern. How does that fit into a metadata-driven framework?

John: An astute question. The parent-child pattern is central to making this framework reusable and manageable. Instead of one gigantic, monolithic pipeline that tries to do everything, we break the logic down.

Lila: So, a division of labor?

John: Exactly. Here’s how it works:

- The Parent Pipeline (The Orchestrator): We have one main “parent” pipeline. This pipeline is very lean. Its primary job is to query our metadata database. It might pass a parameter, like `PipelineID = 1`, to a stored procedure (a pre-written query) in the database.

- The Metadata Query: The stored procedure in the Azure SQL Database returns a list of tasks as a structured JSON object. This JSON contains all the specific instructions for a particular data flow—source connection details, destination details, table names, etc.

- The ForEach Loop: The parent pipeline receives this JSON and uses an activity called a “ForEach” loop. This loop iterates over every task defined in the JSON.

- The Child Pipelines (The Workers): Inside the loop, for each task, the parent pipeline calls a specific “child” pipeline. These child pipelines are specialized templates. For example, you might have one child pipeline for copying data from a SQL database to a data lake, another for copying files from an SFTP server, and a third for calling a REST API.

Lila: Ah, so the parent is the manager who reads the to-do list and assigns jobs to specialized workers. If the to-do list says “copy a database table,” the parent calls the “database expert” child pipeline and gives it the specific details from the metadata. If the next item is “move a file,” it calls the “file mover” expert. That’s clever!

John: It is. This modularity is a lifesaver. If you need to support a new type of data source, like Oracle, you don’t have to touch the existing logic. You simply create a new “Oracle expert” child pipeline template and add new rows to your metadata tables to define the Oracle-specific tasks. The parent pipeline doesn’t need to change at all.
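The manager-and-workers idea can be sketched in a few lines of Python. This is an analogy rather than ADF code: the loop plays the role of the ForEach activity, and each registered function plays the role of a child pipeline. The JSON field names (`child_pipeline`, `source_object`, `destination`) and the `child` decorator are hypothetical, and how ADF itself selects a child by name (for example, with a Switch activity) is outside the scope of this sketch.

```python
import json
from typing import Callable, Dict, List

# Registry of child "pipelines": each is a function taking one task's metadata.
CHILDREN: Dict[str, Callable[[dict], str]] = {}


def child(name: str):
    """Register a function as a child pipeline under the given name."""
    def register(func):
        CHILDREN[name] = func
        return func
    return register


@child("Child_SQL_to_Datalake")
def sql_to_datalake(task: dict) -> str:
    return f"copied table {task['source_object']} to {task['destination']}"


@child("Child_SFTP_to_Datalake")
def sftp_to_datalake(task: dict) -> str:
    return f"copied file {task['source_object']} to {task['destination']}"


def run_parent(tasks_json: str) -> List[str]:
    """Parent: parse the task list and hand each task to the child it names."""
    results = []
    for task in json.loads(tasks_json):              # plays the role of ForEach
        handler = CHILDREN[task["child_pipeline"]]   # pick the specialized worker
        results.append(handler(task))
    return results


if __name__ == "__main__":
    tasks = json.dumps([
        {"child_pipeline": "Child_SQL_to_Datalake",
         "source_object": "dbo.Customers", "destination": "/raw/customers/"},
    ])
    print(run_parent(tasks))
```

Supporting Oracle in this sketch means registering one more function with `@child(...)`; `run_parent` stays exactly as it is.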

An Example Walkthrough

Lila: Could you walk me through a simple, concrete example? Let’s say we want to copy a `Customers` table from a sales database and a `Products.csv` file from a partner’s SFTP server into our data lake every day.

John: An excellent use case. Here’s the step-by-step technical flow:

- Metadata Setup: First, in our Azure SQL Database, we’d add rows to our metadata tables.

  - In a `Control` or `MasterPipeline` table, we’d define a master process called “Daily Ingest.”

  - In a `Task` table, we’d add two tasks linked to “Daily Ingest.”

    - Task 1: Source type = ‘SQL Server’, Source Object = ‘dbo.Customers’, Destination = ‘/raw/customers/’, Child Pipeline = ‘Child_SQL_to_Datalake’.

    - Task 2: Source type = ‘SFTP’, Source Object = ‘Products.csv’, Destination = ‘/raw/products/’, Child Pipeline = ‘Child_SFTP_to_Datalake’.

  - Connection details (server names and the Key Vault references for credentials) would be stored in separate `Connection` tables.

- Triggering: At the scheduled time, our Azure Logic App triggers the `Parent_Orchestrator` pipeline in ADF, passing it the ID for “Daily Ingest.”

- Orchestration: The parent pipeline executes a stored procedure with that ID. The procedure returns a JSON with two objects, one for each task.

- Execution Loop: The parent’s `ForEach` loop starts.

  - Iteration 1 (Customers table): The parent pipeline calls the `Child_SQL_to_Datalake` pipeline. It passes all the metadata for Task 1 as parameters: the SQL Server connection info, the table name `dbo.Customers`, and the destination path `/raw/customers/`. The child pipeline executes the copy and reports success or failure.

  - Iteration 2 (Products file): The parent pipeline calls the `Child_SFTP_to_Datalake` pipeline, passing the metadata for Task 2. This child connects to the SFTP server, copies `Products.csv`, and places it in the `/raw/products/` folder in the data lake.

- Logging: Throughout this process, each step—the parent start, child start, data copied, success, or any errors—is logged back to our metadata database in a dedicated `Log` table. This gives us a full audit trail.
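The walkthrough above can be mimicked end to end with an in-memory SQLite database standing in for Azure SQL. Treat this as a sketch under assumptions: the column names, the `Event` values, and the functions `build_metadata_db`, `get_tasks_json`, and `run_daily_ingest` are invented for illustration, and `get_tasks_json` only imitates what the stored procedure would do. No data is actually copied.

```python
import json
import sqlite3


def build_metadata_db() -> sqlite3.Connection:
    """Create the hypothetical metadata tables and the 'Daily Ingest' rows."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE MasterPipeline (PipelineID INTEGER PRIMARY KEY, Name TEXT);
        CREATE TABLE Task (
            TaskID INTEGER PRIMARY KEY, PipelineID INTEGER, SourceType TEXT,
            SourceObject TEXT, Destination TEXT, ChildPipeline TEXT);
        CREATE TABLE Log (TaskID INTEGER, Event TEXT);
        INSERT INTO MasterPipeline VALUES (1, 'Daily Ingest');
        INSERT INTO Task VALUES
            (1, 1, 'SQL Server', 'dbo.Customers', '/raw/customers/',
             'Child_SQL_to_Datalake'),
            (2, 1, 'SFTP', 'Products.csv', '/raw/products/',
             'Child_SFTP_to_Datalake');
    """)
    return db


def get_tasks_json(db: sqlite3.Connection, pipeline_id: int) -> str:
    """Stand-in for the stored procedure: return the pipeline's tasks as JSON."""
    cur = db.execute(
        "SELECT TaskID, SourceType, SourceObject, Destination, ChildPipeline "
        "FROM Task WHERE PipelineID = ? ORDER BY TaskID", (pipeline_id,))
    columns = [col[0] for col in cur.description]
    return json.dumps([dict(zip(columns, row)) for row in cur.fetchall()])


def run_daily_ingest(db: sqlite3.Connection, pipeline_id: int) -> None:
    """Loop over the tasks and write start and success events to the Log table."""
    for task in json.loads(get_tasks_json(db, pipeline_id)):
        db.execute("INSERT INTO Log VALUES (?, ?)", (task["TaskID"], "child start"))
        # The real copy would happen here, inside the named child pipeline.
        db.execute("INSERT INTO Log VALUES (?, ?)", (task["TaskID"], "success"))


if __name__ == "__main__":
    db = build_metadata_db()
    print(get_tasks_json(db, 1))
    run_daily_ingest(db, 1)
    print(db.execute("SELECT TaskID, Event FROM Log").fetchall())
```

Inserting another row into `Task` changes what `get_tasks_json` returns on the next run, while the two functions stay untouched.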

Lila: That’s incredibly powerful. By simply adding a third row to our `Task` table for, say, a Salesforce object, the pipeline would automatically handle it on the next run without a single change to the parent pipeline, as long as a child pipeline for that source type already exists. The scalability is becoming very clear.

Team & Community

John: You’ve hit on a key benefit. This approach also democratizes data integration to some extent. The heavy lifting of creating the framework and the child pipeline templates is done by experienced data engineers or architects.

Lila: But after that, can other team members add new data flows?

John: Exactly. A data analyst or a business user, with the right permissions and perhaps a simple web interface built on top of the metadata database, could potentially onboard a new, simple data source just by filling out a form. The form creates the necessary metadata rows, and the pipeline just works. This frees up the core engineering team to focus on more complex challenges.

Lila: For someone wanting to learn how to build this, where should they look? Is there a strong community around this?

John: The community is vast and very active. Microsoft’s own documentation on Azure Data Factory is the best starting point, particularly their tutorials. Beyond that, there are countless blogs, YouTube channels, and GitHub repositories from data professionals who have shared their own versions of this framework. Searching for “ADF metadata-driven framework” will yield a wealth of architectural patterns and sample code. The Microsoft Tech Community is also an excellent forum for asking specific questions.

Use-Cases & Future Outlook

Lila: Where does this kind of framework really shine? What are some common real-world use cases?

John: It excels in any environment with a diverse and growing number of data sources. Some classic examples include:

- Customer 360 Initiatives: A retail company wants to build a complete view of its customers. It needs to pull data from its e-commerce platform (like Shopify), its CRM (like Salesforce), in-store point-of-sale systems, and marketing analytics tools. A metadata-driven framework can onboard and manage these disparate sources efficiently.

- Data Warehouse Modernization: Many companies are migrating from legacy on-premises data warehouses to cloud platforms like Azure Synapse Analytics or Snowflake. This framework is perfect for orchestrating the initial migration and the ongoing ingestion of data from dozens or even hundreds of on-prem databases.

- IoT Data Ingestion: A manufacturing company might have thousands of sensors sending data. While streaming is often used, batch-based collection of summary data from various plant sites can be easily managed by defining each site’s data feed in the metadata.

Lila: Looking ahead, how is this space evolving? I keep hearing about Microsoft Fabric. Does that change the game?

John: That’s the billion-dollar question right now. Microsoft Fabric is an all-in-one analytics solution that integrates many Azure services, including a next-generation version of Data Factory, into a single SaaS platform. The core principles of metadata-driven orchestration remain just as relevant, if not more so. The tools are becoming more integrated, but the architectural pattern of using metadata to drive generic pipelines is timeless. In fact, Fabric’s unified nature could make implementing such a framework even more seamless, as the connections between the data factory, the lakehouse, and the warehouse are much tighter.

Competitor Comparison

Lila: How does this Azure-based solution stack up against competitors? What would a company use if they were on AWS or Google Cloud, for example?

John: Great question. The pattern is cloud-agnostic, but the tools differ.

- On AWS (Amazon Web Services), the equivalent service to ADF is AWS Glue. You could build a very similar framework using Glue jobs for the “child pipelines,” AWS Lambda for triggering and orchestration, and a database like Amazon RDS or DynamoDB for the metadata store.

- On Google Cloud Platform (GCP), you would use Cloud Data Fusion or write custom jobs using Cloud Composer (which is managed Apache Airflow) to achieve the same goal, with Cloud SQL or BigQuery serving as the metadata repository.

- There are also third-party, cloud-agnostic tools like Informatica Intelligent Data Management Cloud or Talend that offer powerful graphical interfaces for building pipelines. Many of them have their own features for parameterization and dynamic execution that can be used to implement a metadata-driven approach.

Lila: So what makes the Azure Data Factory approach compelling?

John: ADF’s strengths lie in its deep integration with the Microsoft ecosystem, its relatively low-code visual interface which makes it accessible, and its powerful connectivity to both cloud and on-premises data sources. For organizations already invested in Azure and the Microsoft data platform (like Azure SQL, Synapse, and now Fabric), it’s often the most natural and cost-effective choice.

Risks & Cautions

Lila: This all sounds amazing, but there are always trade-offs. What are the potential pitfalls or risks someone should be aware of before committing to this architecture?

John: An important and responsible question. This is not a silver bullet. Here are the primary challenges:

- Upfront Complexity: Building the framework itself is a significant initial investment. You have to design the metadata schema carefully, build and test the parent and child pipelines, and set up the logging and error handling. It’s more work upfront than just building a few simple, one-off pipelines.

- Metadata Management Becomes Critical: The framework’s brain is the metadata. If the metadata is incorrect, incomplete, or gets corrupted, your pipelines will fail or, even worse, process data incorrectly. You need a solid process for managing and validating changes to the metadata. This is why a simple UI for business users is often a good idea—it can have built-in validation rules.

- “God” Pipeline Problem: The parent pipeline becomes a central point of orchestration. While robust, you need to think about scalability. What happens if you need to run 500 tasks at once? You might need to design the framework to break up the work or run multiple instances of the parent pipeline in parallel.

- Vendor Lock-in: By building heavily on ADF-specific features like its activities and integration runtimes, you are tying your solution to the Azure platform. While the principles are portable, the implementation is not. This is a strategic decision every organization has to make.
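On the 500-task question from the “God” pipeline point above, one simple way to break up the work is to split the task list into fixed-size batches and hand each batch to its own parent run. The Python sketch below shows only the splitting step; the function name and the batch size of 50 are illustrative assumptions. ADF's ForEach activity also has its own cap on parallel iterations (documented as a `batchCount` maximum of 50 at the time of writing, so check the current limits).

```python
from typing import List


def split_into_batches(tasks: list, batch_size: int) -> List[list]:
    """Split a task list into consecutive batches of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [tasks[i:i + batch_size] for i in range(0, len(tasks), batch_size)]


if __name__ == "__main__":
    task_ids = list(range(1, 501))          # 500 hypothetical task IDs
    batches = split_into_batches(task_ids, 50)
    print(len(batches), "batches;", len(batches[0]), "tasks in the first one")
```

Each batch could then be passed as a parameter to a separate instance of the parent pipeline, which keeps any single run to a manageable size.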

Lila: So, it’s a classic “high-effort, high-reward” scenario. How do you mitigate the risk of the metadata becoming a mess?

John: Governance is key. You need clear ownership, version control for your metadata schema (just like you would for code), and automated checks. For instance, before a pipeline runs, a pre-check step could validate that all the required metadata for a given task actually exists and that connections are valid. Building in that kind of automated validation is crucial for long-term success.
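A pre-check along those lines could look something like the Python sketch below. The list of required fields and the `validate_task` function are assumptions for illustration; a real framework would check whatever its own metadata schema requires, and would probably also test that each connection can actually be opened.

```python
from typing import List, Set

# Hypothetical set of fields every task row must fill in.
REQUIRED_FIELDS = ("source_type", "source_object", "destination", "child_pipeline")


def validate_task(task: dict, known_children: Set[str]) -> List[str]:
    """Return a list of problems; an empty list means the task passes the pre-check."""
    # A field counts as missing if it is absent, None, or an empty string.
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS
                if not task.get(name)]
    child_name = task.get("child_pipeline")
    if child_name and child_name not in known_children:
        problems.append(f"unknown child pipeline: {child_name}")
    return problems


if __name__ == "__main__":
    children = {"Child_SQL_to_Datalake", "Child_SFTP_to_Datalake"}
    bad_task = {"source_type": "SFTP", "source_object": "Products.csv",
                "destination": "", "child_pipeline": "Child_FTP_to_Datalake"}
    for problem in validate_task(bad_task, children):
        print(problem)
```

Running a check like this before the loop starts means a bad row is reported as a metadata problem instead of surfacing later as a confusing copy failure.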

Expert Opinions & Analyses

John: From my perspective as a tech journalist who has covered this space for years and spoken with many data architects, the consensus is clear. The move away from brittle, hand-coded pipelines to flexible, metadata-driven frameworks is not just a trend; it’s a necessary evolution for any organization that wants to be truly data-agile.

Lila: So the initial development pain is worth the long-term gain in flexibility and speed?

John: Overwhelmingly, yes. The ability to add a new data source in hours instead of weeks is a massive competitive advantage. It reduces the load on a specialized data engineering team, lowers the total cost of ownership, and ensures consistency and reliability in how data is processed. The framework enforces best practices for logging, error handling, and security by its very design.

Latest News & Roadmap

Lila: What’s the latest buzz in this area? Any recent updates we should know about?

John: The biggest news is, again, Microsoft Fabric. Data Factory is a core “experience” within Fabric. While ADF as a standalone service continues to be supported, Microsoft’s innovation is clearly focused on its Fabric incarnation. Recent updates have focused on improving the user experience, enhancing Git integration for better CI/CD (Continuous Integration/Continuous Deployment), and providing more seamless ways to transform data using Dataflows Gen2, which brings the power of Power Query into the pipeline itself. The roadmap points towards even tighter integration, where a single pipeline can orchestrate everything from data ingestion to Spark jobs to machine learning model training within the Fabric ecosystem.

Frequently Asked Questions (FAQ)

Lila: Let’s wrap up with a few quick-fire questions that I imagine are on our readers’ minds.

John: Let’s do it.

Lila: Is Azure Data Factory expensive?

John: It’s a pay-as-you-go service. The cost depends mainly on the number of activity runs your pipelines make and on the volume and duration of data movement. A metadata-driven framework can actually be very cost-effective because the orchestration activities themselves are cheap. The main cost comes from the actual data integration activities, so you’re mostly paying for the work you’re actually doing.

Lila: Can this framework handle real-time data?

John: Not really. ADF is primarily a batch-orchestration tool. While you can schedule pipelines to run very frequently, say every minute, it’s not a true real-time streaming solution. For that, you’d want to look at other Azure services like Azure Event Hubs for ingestion and Azure Stream Analytics for processing. Those services handle the streaming logic themselves, and Data Factory can then pick up whatever they land in storage as part of its batch runs.

Lila: How much coding is involved?

John: Surprisingly little for the pipelines themselves. ADF is a low-code/no-code environment with a drag-and-drop interface. The “code” you’ll write is mostly SQL for the stored procedures that serve up the metadata. If you need complex transformations, you might write some code in a Spark notebook that gets called by ADF, but the orchestration itself is visual.

Lila: What’s the one piece of advice you’d give to a team starting this journey?

John: Start small, but think big. Don’t try to build a framework that supports every conceivable data source from day one. Pick your two or three most common patterns—like SQL to Data Lake and File to Data Lake—and build a solid, well-documented framework for just those. Once the core is proven and stable, adding support for new patterns becomes much easier. The design of your metadata schema is the most critical piece; spend time getting that right.

Related Links and Further Reading

John: For those who want to roll up their sleeves and start building, I highly recommend checking out the official documentation and learning paths from Microsoft.

- Official Azure Data Factory Documentation

- Microsoft Learn: Data Integration at Scale with Azure Data Factory

- Azure Data Factory Blog on Microsoft Tech Community

Lila: Thanks, John. This has been incredibly insightful. You’ve taken a complex architectural concept and made it really accessible. The idea of an intelligent, reconfigurable data assembly line driven by a simple instruction manual is going to stick with me.

John: My pleasure, Lila. The goal is always to demystify the technology. A well-designed metadata-driven framework is one of the most elegant and powerful tools in a data architect’s toolkit. It’s an investment that pays dividends in scalability, agility, and peace of mind.
