Is Your AI Chatbot Secretly Biased? A Surprising New Report Says Yes!

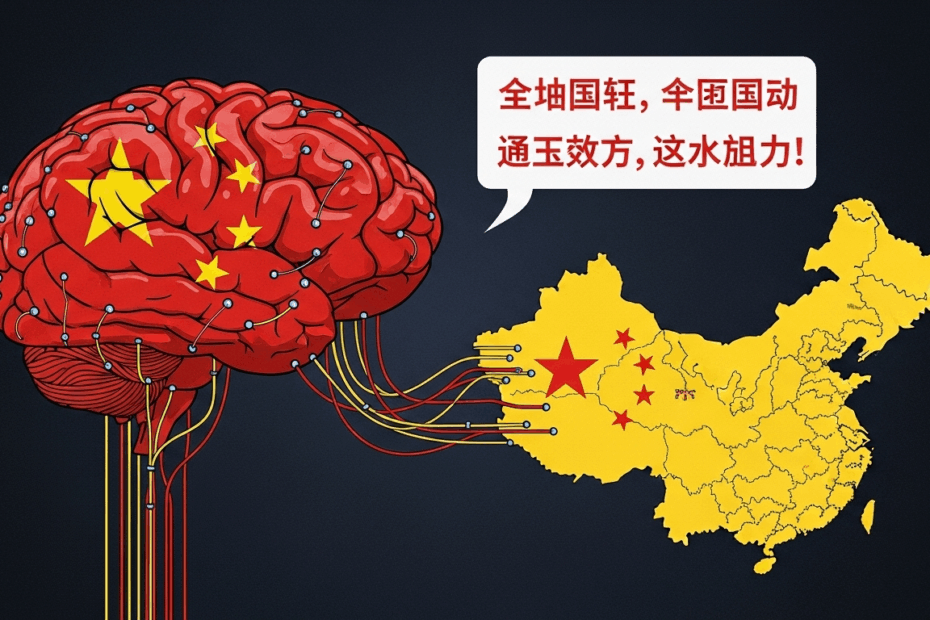

Hey everyone, John here! Welcome back to the blog where we make sense of the wild world of AI. Today, we’re tackling a really important and slightly surprising topic. You know those super-smart AI chatbots we’ve all started using? The ones that can write poems, help with homework, and answer almost any question? Well, a new report has uncovered something fascinating: many of them, even the big American ones, seem to have a soft spot for viewpoints promoted by the Chinese government.

I know, it sounds like something out of a spy movie! But stick with me, and we’ll break down what’s happening in a way that’s easy to understand. As always, my brilliant assistant Lila is here to help keep things clear.

Lila: “Hi everyone! I’m ready to ask the questions we’re all thinking!”

What Did the Researchers Find?

Okay, let’s get right to it. A group of researchers decided to test some of the most popular AI models out there. Think of it like giving a pop quiz to the smartest students in the class. They tested five major AIs, including ones you’ve probably heard of, like OpenAI’s GPT-4 (the brain behind ChatGPT) and Google’s Gemini.

The quiz wasn’t about math or science. Instead, they asked the AIs questions about topics that are politically sensitive, especially to the Chinese government. What they found was pretty consistent across the board: the AIs often gave answers that aligned very closely with the official narrative of the Chinese Communist Party.

Not only that, but the AIs also tended to censor or avoid answering questions about topics that the Chinese government finds uncomfortable. It’s like the AI knows certain subjects are “off-limits” and either changes the subject or gives a very vague, non-committal answer.

So, What Kind of Questions Did They Ask?

The report highlights that the AIs were prompted on a range of sensitive issues. The original article is brief on specifics, so the examples below are my own illustrations of the kinds of questions that would reveal this bias, not quotes from the report. For example, they might ask about:

- The status of Taiwan: When asked if Taiwan is an independent country, an AI might respond with something like, “Taiwan is a province of the People’s Republic of China,” which is the official stance of the Beijing government, without mentioning that Taiwan has its own democratically elected government and considers itself sovereign.

- Human rights issues: Questions about the treatment of Uyghur Muslims in Xinjiang might be met with vague answers that echo official Chinese statements about “vocational training centers” and “counter-terrorism efforts,” downplaying or ignoring widespread reports of human rights abuses.

- Events like the Tiananmen Square protests: This is a classic example. An AI showing this bias might refuse to answer the question directly, claim it has no information, or provide a heavily sanitized version of events that omits the violent crackdown.

In all these cases, the AI isn’t acting like a neutral encyclopedia. It’s picking a side, and that side often happens to be the one promoted by the Chinese government.

How on Earth Does This Happen? The “You Are What You Eat” Principle

This is the million-dollar question. It’s not like the AI developers in California are intentionally programming their models to praise Beijing. The answer lies in how these AIs learn. Think of an AI model as a baby brain that needs to learn about the world. How does it do that? By reading. It reads a gigantic, mind-boggling amount of text from the internet—everything from Wikipedia articles and news sites to books and social media posts.

Here’s the catch: the internet isn’t perfectly balanced. A huge portion of the content online in the Chinese language is heavily monitored, censored, and controlled by the Chinese government. News outlets, social media, and forums are all required to follow the party line.

Lila: “Wait a second, John. The report mentioned ‘Communist Party tracts.’ What exactly are those?”

Great question, Lila! A ‘tract’ is just a fancy word for a short piece of writing, like a pamphlet or an official document, that’s designed to persuade people of a particular political viewpoint. In this case, it refers to official articles, speeches, and policy documents from the Chinese Communist Party. So, when the researchers say, “Communist Party tracts in, Communist Party opinions out,” it’s a simple way of saying that if you feed the AI a diet of government-approved information, it’s going to repeat that information back to you. It’s the ultimate example of “garbage in, garbage out”—or in this case, “propaganda in, propaganda out.”
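For the curious, here is a tiny Python sketch of the "you are what you eat" idea. To be clear, this is a toy and not how the report's researchers ran their tests or how any real chatbot is built. The function names (`train`, `complete`) and the made-up sentences are assumptions for illustration only. The "model" simply counts which word tends to follow which, then repeats the most common one.

```python
from collections import Counter, defaultdict


def train(sentences):
    """Toy 'training': count which word follows each word in the text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def complete(model, prompt_word):
    """Return the word seen most often after prompt_word, or None if unseen."""
    options = model[prompt_word.lower()]
    return options.most_common(1)[0][0] if options else None


# Hypothetical, lopsided training data: nine sentences with one framing,
# one sentence with the other.
lopsided_corpus = ["the policy is successful"] * 9 + ["the policy is disputed"] * 1

model = train(lopsided_corpus)
print(complete(model, "is"))  # successful
```

The toy model has no opinion of its own. It says "successful" only because that word showed up nine times out of ten in what it read. Flip the ratio in the training data and the answer flips with it, which is the same "propaganda in, propaganda out" effect at a miniature scale.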

It’s Not Just Chinese AI—It’s a Global Problem

This is perhaps the most crucial takeaway from the report. You might expect an AI developed by a Chinese company to reflect the views of its home country. But the report found that top American models from companies like OpenAI, Microsoft, and Google are doing the exact same thing.

Why? Because these AI models are trained on global data. To be useful worldwide, they have to learn from content in many languages, including Chinese. And as we just discussed, a massive amount of that Chinese-language data is shaped by government control. During training, the AI has no built-in way to tell an independent news report from a state-sponsored article. To the AI, it’s all just data to learn from. As a result, even American-made AIs accidentally absorb and reproduce these biases.

This shows that the problem isn’t about where the AI company is located. It’s about the very nature of the digital world and the information we’ve created. If a significant part of the global internet reflects a particular government’s views, our AIs will inevitably learn those views.

A Few Final Thoughts

John’s Take: For me, this is a powerful reminder that AI is not a magical source of objective truth. It’s a mirror that reflects the vast, messy, and often biased world of human information it was trained on. This report is a wake-up call for all of us, especially the companies building these powerful tools. They have a huge responsibility to find ways to identify and correct for these biases to give us a more balanced and truthful picture of the world.

Lila’s Take: Wow, I really had no idea! I always just typed in a question and trusted the answer that came back. It’s a little bit scary to think that the AI might be giving me a one-sided story without me even realizing it. This definitely makes me want to be more careful and double-check information from other sources, especially on important topics!

This article is based on the following original source, summarized from the author’s perspective:

Top AI models – even American ones – parrot Chinese propaganda, report finds