Scary: ChatGPT is being exploited for cybercrime, from fake resumes to aiding cyber-ops. What can we do? #ChatGPT #Cybersecurity #AI


Hello, Everyone! John Here, with a Big Topic!

You know how we often talk about how amazing Artificial Intelligence, or AI, is? It’s like having a super-smart helper that can write stories, answer questions, and even help with homework. We often focus on the cool things it can do, but just like any powerful tool, there’s always a risk that some folks might try to use it for not-so-good things.

Today, we’re diving into a recent report from OpenAI, the company behind ChatGPT. They’ve shared some important news about how certain groups are trying to use their AI for malicious purposes. Don’t worry, we’ll break it down into super easy-to-understand chunks!

OpenAI Fights Back: Kicking Out the Bad Guys

So, the big news from OpenAI is that they’ve identified and shut down accounts linked to 10 different “malicious campaigns.”

Lila: Hold on, John! What exactly does “malicious campaigns” mean? Sounds a bit scary!

John: Good question, Lila! Think of a “malicious campaign” as a planned series of bad actions, like when a group of people tries to trick others, spread false information, or break into computer systems. So, when OpenAI says they shut down accounts linked to these campaigns, it means they stopped people who were trying to use ChatGPT for these harmful plans.

OpenAI basically acted like a digital bouncer, identifying the troublemakers and kicking them out of the club (which is their AI platform).

Who’s Using AI for Trouble? The “Baddies” List

OpenAI’s report identified several groups from different parts of the world who were attempting to misuse ChatGPT. They’re not just random individuals; some are even linked to governments!

- Groups from North Korea: These folks were reportedly trying to create fake resumes for IT workers. Imagine trying to get a job at a company by pretending to be someone you’re not, just to get inside their systems!

- Groups linked to China: These are often called “Beijing-backed cyber operatives.”

- Groups from Russia: These are often called “malware slingers.” They were reportedly using ChatGPT to create fake emails (called “phishing emails”) and simple computer code for their attacks. They also used it to find public information about their targets.

- Groups from Iran: Similar to the Russian groups, they were also generating phishing emails and using social engineering tricks (which means manipulating people into giving up information) with AI’s help. They also used it for research to find out more about their targets.

Lila: “Cyber operatives”? What are those, like secret agents for the internet?

John: That’s a fun way to think about it, Lila! “Cyber operatives” are basically individuals or groups who work, often secretly and with government backing, to perform actions in the digital world. This can include spying on other countries, stealing information, or even disrupting computer networks. They’re like digital spies or special forces.

Coming back to the list: the China-linked groups were reportedly using ChatGPT for things like researching targets, generating computer code, fixing errors in their harmful programs (we call that “debugging”), and creating enticing messages to trick people.

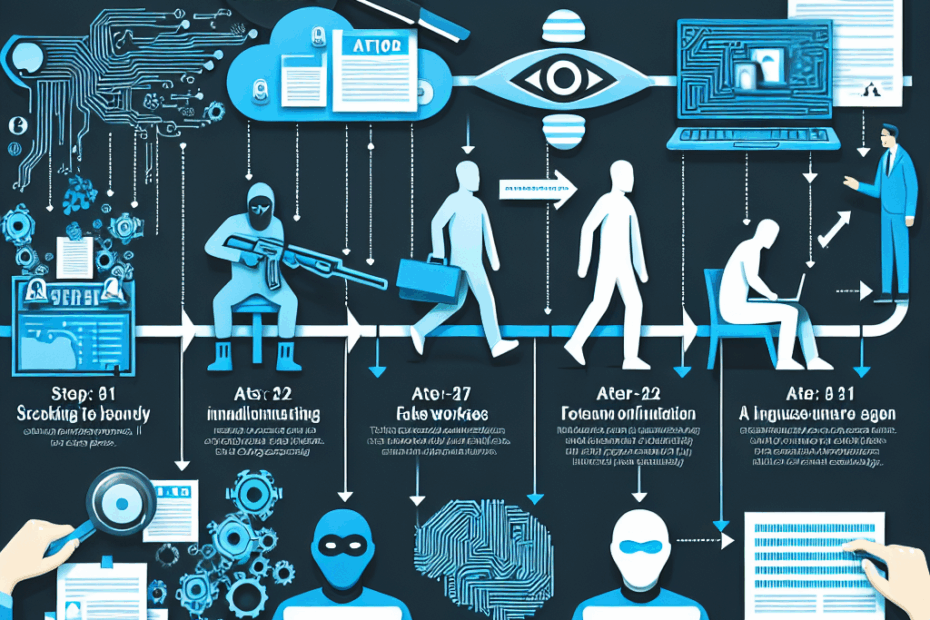

How Are They Using ChatGPT for “Evil”?

It’s important to understand that ChatGPT isn’t suddenly becoming a super-hacker that writes harmful computer viruses from scratch. Instead, these bad actors are using it as a super-smart assistant to help them with various stages of their harmful plans.

Here are some of the ways they’ve been trying to use AI:

- Making Fake Resumes:

This is a particularly sneaky one. The North Korean groups were allegedly using AI to generate realistic-looking resumes for IT jobs. They’d then try to get hired by companies, and once inside, they could potentially steal information or disrupt systems. It’s like using a really good fake ID to get past security.

- Spreading Lies and Misinformation:

AI is great at writing text, and unfortunately, this can be misused to create believable-sounding articles or social media posts that are actually completely false. This is called “misinformation” or “propaganda,” and it can be used to mislead people or cause confusion.

- Helping with Cyberattacks (Cyber-op Assist):

This covers a wide range of activities. The bad guys are using AI to:

- Do their Homework (Reconnaissance): AI can quickly search for information about a target – like finding out which companies they work with, their technical setup, or even personal details, which can then be used in an attack.

- Write Simple Code: While AI isn’t creating complex viruses, it can help generate small bits of code or scripts that are useful in an attack, or even help fix errors in their existing harmful programs.

- Create “Lures”: This means crafting really convincing messages or fake websites designed to trick someone into clicking a link, downloading a file, or giving up personal information.

- Generate Phishing Emails: This is a big one.

Lila: Oh, I know this one! Is it when you get emails that look like they’re from your bank or a famous company, but they’re really trying to steal your password?

John: Exactly, Lila! You’ve got it. “Phishing emails” are deceptive emails designed to trick you into revealing sensitive information, like passwords or credit card numbers, or to download harmful software. They often look very legitimate, mimicking real organizations. AI makes it easier for bad actors to write these convincing fakes quickly and in many different languages.
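For the curious, here is a tiny Python sketch of the kind of warning signs John just described. It is only a toy illustration, not a real spam filter: the function name `phishing_red_flags`, the word list, and the example addresses are all made up for this post, and real email security tools are far more sophisticated.

```python
import re
from urllib.parse import urlparse

# Hypothetical list of "pressure" phrases; a real filter would use far more signals.
URGENT_WORDS = ("urgent", "immediately", "verify your account", "suspended", "password")


def phishing_red_flags(sender: str, claimed_brand: str, body: str) -> list[str]:
    """Return simple warning signs found in an email (toy heuristic only)."""
    flags = []
    brand = claimed_brand.lower()

    # Red flag 1: the email claims to come from a brand,
    # but the sender's domain does not mention that brand at all.
    sender_domain = sender.rsplit("@", 1)[-1].lower()
    if brand not in sender_domain:
        flags.append("sender domain does not match claimed brand")

    # Red flag 2: wording that pressures you to act fast or hand over a password.
    lowered = body.lower()
    if any(word in lowered for word in URGENT_WORDS):
        flags.append("urgent or password-related wording")

    # Red flag 3: links that lead somewhere other than the brand's own site.
    # Note: plain substring matching is easy to fool; this is only a sketch.
    for url in re.findall(r"https?://\S+", body):
        host = (urlparse(url).hostname or "").lower()
        if brand not in host:
            flags.append(f"link points to unrelated site: {host}")

    return flags


suspicious = phishing_red_flags(
    "support@secure-mail.example",
    "mybank",
    "URGENT: verify your account at http://login.example.net/reset now",
)
print(suspicious)
```

In this made-up example, the message trips all three checks, while an ordinary statement notice sent from the bank's own domain would trip none. The takeaway is the same as John's: check who really sent the email and where the links really go.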

So, AI isn’t the mastermind behind the attack, but more like a very efficient helper, making it easier and faster for these groups to carry out their plans.

How Does OpenAI Stop Them?

OpenAI isn’t just sitting back. They’re actively working to detect and prevent misuse of their AI tools. They do this by:

- Looking for Patterns: They analyze how accounts are used, looking for “distinctive characteristics and behaviors” that indicate malicious activity. Think of it like a security guard watching for suspicious movements.

- Working with Partners: They’re not alone in this fight. OpenAI is working with other cybersecurity companies, researchers, and even governments to share information and intelligence about these threats. This teamwork is crucial!
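To make “looking for patterns” a little more concrete, here is a very small Python sketch. Please treat it as an assumption-heavy toy: the report summary does not describe OpenAI's actual detection methods, and the `Request` class, the category labels, and the `min_hits` threshold are all hypothetical names invented for this example. The idea is simply to count how often each account does something suspicious and flag the ones that cross a threshold.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical labels for activity that looks suspicious.
SUSPICIOUS_CATEGORIES = {"phishing_like", "bulk_fake_resume"}


@dataclass
class Request:
    account_id: str
    category: str  # assumed to be assigned by some earlier classification step


def flag_accounts(requests, min_hits=3):
    """Return accounts with at least `min_hits` suspicious requests, sorted by name."""
    hits = Counter(
        r.account_id for r in requests if r.category in SUSPICIOUS_CATEGORIES
    )
    return sorted(account for account, count in hits.items() if count >= min_hits)


log = [
    Request("acct_a", "homework_help"),
    Request("acct_b", "phishing_like"),
    Request("acct_b", "phishing_like"),
    Request("acct_b", "bulk_fake_resume"),
    Request("acct_a", "phishing_like"),
]
print(flag_accounts(log))  # prints ['acct_b']
```

One odd request does not get `acct_a` flagged, but the repeated pattern from `acct_b` does. That is the security guard idea in miniature: a single strange movement is not enough, but a consistent pattern stands out.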

Why This Report Matters

This is a significant step because OpenAI is publicly sharing detailed information about specific state-affiliated groups trying to misuse their AI. It shows they are committed to transparency and to fighting against the misuse of their technology for harmful purposes.

John’s and Lila’s Thoughts

John: Well, that was a lot to take in, wasn’t it? For me, this report is a stark reminder that as AI technology gets more powerful, so does the responsibility to use it wisely and to protect against its misuse. It’s like having a super-advanced workshop; you need good security measures and rules to ensure only good things are built there. It shows that the “good guys” in tech are always working hard to stay one step ahead of the “bad guys.”

Lila: At first, I felt a little scared thinking about AI being used for bad things! But then hearing how OpenAI is actively stopping them, and working with other companies and even governments, makes me feel a lot better. It’s like a big team of superheroes fighting against the cyber villains!

That’s all for today, folks! Stay curious, stay safe online, and we’ll chat again soon!

This article is based on the following original source, summarized from the author’s perspective:

ChatGPT used for evil: Fake IT worker resumes, misinfo, and cyber-op assist