

AWS AI Factories: Cutting Through the Hype to Real Business Value

🎯 Level: Business Leader

👍 Recommended For: CTOs navigating AI infrastructure decisions, IT Managers optimizing enterprise workflows, AI Strategists evaluating vendor lock-in risks

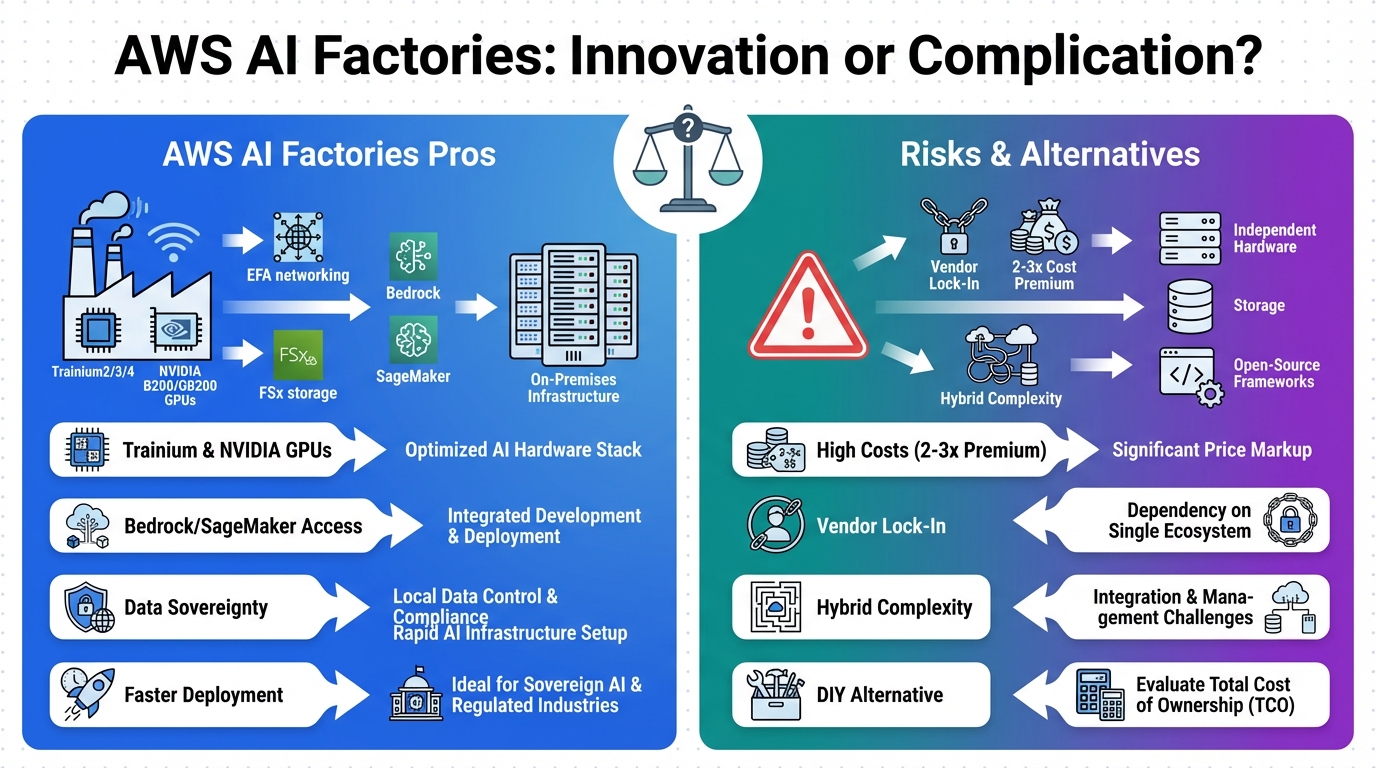

In today’s fast-paced enterprise landscape, the rush to adopt AI often collides with a harsh reality: skyrocketing infrastructure costs and vendor dependencies that stifle agility. AWS’s latest offering, AI Factories, promises to transform data centers into AI powerhouses, but as a recent InfoWorld analysis points out, it might just complicate your stack with higher costs and lock-in. I’m John, and I’ve seen enough hype cycles to know when to roast the buzzwords and dig into the engineering truth. Joining me is Lila, our bridge for those new to the tech trenches, who makes sure we break it down without leaving anyone behind.

John: Let’s be real: AWS AI Factories sound like the next big thing, a collaboration with NVIDIA to turn your existing setup into a high-performance AI environment. But the InfoWorld piece titled “AWS AI Factories: Innovation or complication?” lays it out starkly: adopting this could mean higher costs, vendor lock-in, and added complexity. Why? Because it’s essentially a pre-packaged solution that might not fit your unique needs. Enterprises are already grappling with multicloud resistance, inefficient code bloating budgets, and the hidden costs of scaling AI. This isn’t just tech talk; it’s a business bottleneck that’s draining ROI left and right.

Lila: If you’re a business leader dipping your toes into AI, think of it like this: Imagine your company as a bustling factory. Traditional AI setups are like outdated assembly lines—slow, expensive to maintain, and prone to breakdowns. AWS AI Factories aim to modernize that, but as John says, it might just add more gears that don’t mesh well with what you already have.

The “Before” State: Traditional AI Infrastructure Pain Points

Before diving into AWS’s offering, let’s contrast it with the old ways. Historically, enterprises built AI systems piecemeal: cobbling together cloud instances, on-premises servers, and third-party tools. This led to fragmented data silos, where sensitive information couldn’t move freely due to sovereignty concerns, and compute power was often underutilized. Costs ballooned—think billions wasted on inefficient code, as noted in recent vendor analyses. Integration was a nightmare, with teams spending months on custom scripting just to get models running. The result? Delayed deployments, compliance headaches, and AI projects that promised the moon but delivered dust.

John: I’ve battled these in the trenches. Take a typical Fortune 500 firm: they’re running legacy data centers that can’t handle the GPU demands of modern LLMs like Llama-3-70B. Without optimization, you’re looking at latency spikes and energy bills that could fund a small startup. AWS AI Factories claim to fix this by integrating NVIDIA’s tech for rapid AI app deployment, but the InfoWorld critique warns of the trap: it’s innovative on paper, but in practice it locks you into AWS’s ecosystem, which puts long-term ROI at risk.

Lila: For beginners, picture the “before” as cooking a gourmet meal in a tiny kitchen with mismatched tools. Everything takes forever, and half the ingredients go to waste. The new approach? A professional kitchen setup—but only if it doesn’t force you to buy everything from one supplier.

Core Mechanism: Structured Reasoning Behind AWS AI Factories

At its core, AWS AI Factories combine AWS’s cloud infrastructure with NVIDIA’s GPUs to create bespoke AI environments. The executive summary: it’s about scaling AI while respecting data sovereignty, keeping sensitive data on-premises or in compliant regions. The reasoning breaks down into three steps: first, assess your existing infrastructure; second, integrate Trainium chips or Graviton processors for efficient compute; third, deploy via tools like Amazon Bedrock for agentic workflows. But here’s the engineering reality: while it promises speed (rapid prototyping) and cost savings through optimization, the complication arises from dependency. Building your own stack with open-source alternatives, such as Hugging Face Transformers for model fine-tuning on self-managed Kubernetes clusters, often yields better control and lower lock-in.

John: Let’s get specific, no gatekeeping here. Quantization (shrinking models by storing their weights at lower numerical precision) via libraries like bitsandbytes can cut costs without AWS’s proprietary stack. Pair that with vLLM for inference serving, and you’ve got a flexible setup that avoids the “complication” trap InfoWorld highlights.
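
To put a rough number on why quantization matters for cost, here is a back-of-the-envelope sketch. It assumes a model with about 70 billion parameters (the size implied by a name like Llama-3-70B) and counts only the memory needed to hold the weights. It ignores activations, the KV cache, and the small overhead real quantization formats add, so treat the results as a lower bound, not a sizing guide. The function name and constants are illustrative and do not come from any library.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: roughly 70 billion parameters, as the "70B" in the name suggests.
PARAMS_70B = 70e9

fp16_gb = weight_memory_gb(PARAMS_70B, 16)  # common default precision
int8_gb = weight_memory_gb(PARAMS_70B, 8)   # 8-bit quantization
int4_gb = weight_memory_gb(PARAMS_70B, 4)   # 4-bit quantization

for label, gb in [("16-bit", fp16_gb), ("8-bit", int8_gb), ("4-bit", int4_gb)]:
    print(f"{label}: ~{gb:.0f} GB of weights")
```

Under these assumptions, going from 16-bit to 4-bit weights cuts the weight footprint to a quarter, which can be the difference between needing several GPUs and needing one or two. Whether accuracy holds up at lower precision depends on the model and the task, so it needs to be measured rather than assumed.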

Lila: Analogy time: It’s like upgrading from a bicycle to a car. AWS provides the car, but building your own (with parts from various makers) might give you a custom ride that’s cheaper to maintain.

Use Cases: Practical Value in Action

To illustrate, here are three concrete scenarios where AWS AI Factories could shine—or stumble—based on real-world applications.

- Financial Services Optimization: A bank needs to process transaction data for fraud detection without breaching data sovereignty. AWS AI Factories enable on-premises NVIDIA clusters integrated with AWS, speeding up models like fine-tuned BERT variants. Benefit: ROI boosts from reduced false positives, but watch for lock-in if expanding to multicloud.

- Healthcare AI Scaling: A hospital chain deploys AI for patient diagnostics. Using AI Factories, they transform existing servers into high-performance environments, respecting HIPAA. Real value: Faster insights, but as per InfoWorld, custom builds with open tools like LangChain for RAG (Retrieval-Augmented Generation—pulling relevant data into models) might offer more flexibility.

- Manufacturing Predictive Maintenance: An auto manufacturer predicts equipment failures. AI Factories integrate with IoT data for real-time analysis, promising cost savings. However, opting for self-built stacks with TensorFlow on edge devices could avoid complexity.

John: These aren’t hypotheticals; they’re drawn from trends like those in Forrester’s re:Invent coverage, where AWS pushes AI-native clouds but enterprises demand measurable value.
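
For readers who want to see what the RAG idea from the healthcare scenario looks like in miniature, here is a toy sketch. It is not LangChain and not how a production system would do it: real deployments typically use embedding models and a vector store, whereas this stand-in ranks documents by simple word overlap (cosine similarity over word counts). The documents, function names, and prompt layout are all hypothetical and exist only to show the retrieve-then-prompt flow.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercased bag-of-words counts (a crude stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Place the retrieved text ahead of the question, ready to send to a model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical internal notes, invented for illustration only.
DOCS = [
    "Discharge checklist: confirm medication list and follow-up appointment.",
    "MRI scheduling policy: urgent scans are booked within 24 hours.",
    "Visitor hours are 9am to 8pm on weekdays.",
]

question = "How quickly are urgent MRI scans booked?"
top_docs = retrieve(question, DOCS)
prompt = build_prompt(question, DOCS)
print(prompt)
```

The point for a business reader is that the retrieval step is ordinary, portable code. Nothing about the pattern requires a particular cloud, which is part of why custom builds can keep lock-in low.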

Comparison Table: Old Method vs. New Solution

| Aspect | Old Method (Traditional AI Setup) | New Solution (AWS AI Factories or Custom Build) |
|---|---|---|
| Cost Efficiency | High overhead from inefficient code and siloed resources | Lower costs via optimized compute, but potential lock-in hikes long-term expenses |
| Scalability | Limited by hardware constraints and manual integrations | High scalability with NVIDIA integration, ideal for rapid deployment |
| Complexity & Lock-in | Fragmented but flexible | Streamlined yet vendor-dependent; custom alternatives reduce risks |
| ROI Timeline | Slow, with months to value | Faster ROI, but evaluate against build-your-own for sustainability |
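
One way to make the cost and ROI rows concrete is a simple break-even calculation. The sketch below compares cumulative spend for a packaged offering (lower upfront cost, higher monthly cost) with a self-built stack (higher upfront cost, lower monthly cost). Every dollar figure is a made-up placeholder, not AWS pricing or a benchmark, and the model ignores staffing, migration, and discounting. Swap in your own quotes before drawing any conclusion.

```python
from typing import Optional


def cumulative_cost(upfront: int, monthly: int, months: int) -> int:
    """Total spend after a given number of months."""
    return upfront + monthly * months


def break_even_month(a_upfront: int, a_monthly: int,
                     b_upfront: int, b_monthly: int,
                     horizon: int = 120) -> Optional[int]:
    """First month where option B costs no more than option A, else None."""
    for month in range(1, horizon + 1):
        cost_a = cumulative_cost(a_upfront, a_monthly, month)
        cost_b = cumulative_cost(b_upfront, b_monthly, month)
        if cost_b <= cost_a:
            return month
    return None


# Hypothetical placeholder figures in USD, for illustration only.
MANAGED_UPFRONT, MANAGED_MONTHLY = 200_000, 90_000   # packaged offering
CUSTOM_UPFRONT, CUSTOM_MONTHLY = 800_000, 60_000     # self-built stack

month = break_even_month(MANAGED_UPFRONT, MANAGED_MONTHLY,
                         CUSTOM_UPFRONT, CUSTOM_MONTHLY)
print(f"Self-built stack catches up in month {month}")
```

With these placeholder numbers the self-built option catches up at month 20. If your planning horizon is shorter than the break-even point, the packaged route may still be the better deal; if it is longer, the lock-in premium starts to matter.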

Conclusion: Key Insights and Next Steps

In summary, AWS AI Factories represent genuine innovation in scaling AI infrastructure, especially for enterprises needing quick wins in compute power and data handling. However, as the InfoWorld analysis astutely points out, the complication factor—costs, lock-in, and complexity—makes a strong case for building your own architecture. The key mindset shift? Prioritize flexibility over convenience. Next steps: Audit your current setup, experiment with open-source tools like Hugging Face for prototypes, and consult multicloud strategies from reports like Forrester’s. By doing so, you’ll unlock true ROI without the vendor traps.

John: Roast the hype, respect the tech. That’s how we turn complexity into opportunity.

Lila: And remember, start small; scale smart.

👨‍💻 Author: SnowJon (Web3 & AI Practitioner / Investor)

A researcher who leverages knowledge gained from the University of Tokyo Blockchain Innovation Program to share practical insights on Web3 and AI technologies.

His core focus is translating complex technologies into forms that anyone can understand and apply, combining academic grounding with real-world experimentation.

*This article utilizes AI for drafting and structuring, but all technical verification and final editing are performed by the human author.


References & Further Reading

- AWS AI Factories: Innovation or complication? | InfoWorld

- New AWS AI Factories transform customers’ existing infrastructure into high-performance AI environments

- What We Saw At AWS re:Invent 2025

- Introducing AWS AI Factories | AWS