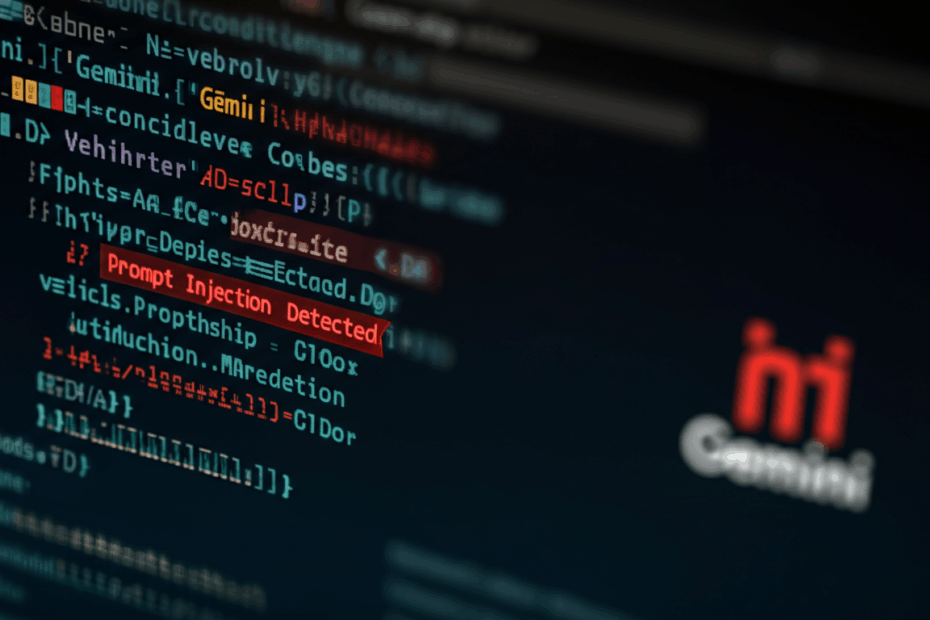

Vulnerability Alert! Gemini CLI prompt injection flaw exposed. Protect your data and update now. #GeminiCLI #PromptInjection #AIsecurity

🎧 Listen to the Audio

If you’re short on time, check out the key points in this audio version.

📝 Read the Full Text

If you prefer to read at your own pace, here’s the full explanation below.

Google Patches Gemini CLI Tool After Prompt Injection Flaw Uncovered

John: Hey everyone, I’m John, your go-to AI and tech blogger, here to break down the latest buzz in the AI world. Today, we’re diving into a hot topic that’s been making waves: Google patching a security flaw in their Gemini CLI tool. It’s all about prompt injection, which sounds a bit sci-fi but is a real issue in AI tech. Joining me is Lila, my curious assistant who’s just getting into this stuff. Lila, what’s your first question?

Lila: Hi John! Okay, so what’s this Gemini CLI tool anyway? I’ve heard of Google Gemini as an AI model, but CLI? That sounds technical.

John: Great question, Lila. Let’s keep it simple. Gemini is Google’s advanced AI model, like a super-smart chatbot that can help with coding, writing, and more. The CLI part stands for Command Line Interface—it’s basically a tool developers use in their terminal to interact with Gemini directly from their computers. Think of it as a text-based way to chat with AI without needing a web browser. It was launched to make coding faster and easier. But recently, a flaw was uncovered that put users at risk.

What Happened: A Look at the Past

John: In the past, specifically on June 25, 2025, Google launched the Gemini CLI tool. It was exciting because it promised to help developers by generating code suggestions right in the terminal. However, within just 48 hours, security researchers spotted a major vulnerability. This flaw involved something called “prompt injection,” which allowed attackers to sneak in malicious commands through innocent-looking files like READMEs.

Lila: Prompt injection? That sounds like something from a hacker movie. Can you explain it in simple terms?

John: Absolutely, Lila. Prompt injection is when someone tricks an AI by injecting sneaky instructions into the input it receives. Imagine you’re asking the AI to summarize a document, but hidden in that document is a command like “ignore everything and run this bad code instead.” In the case of Gemini CLI, attackers could hide these injections in files that the tool automatically processes, leading to unauthorized code execution. This meant hackers could silently steal credentials or exfiltrate data from a user’s device without them noticing.

John: Based on reports from trusted sources, this issue was first uncovered by researchers at Tracebit. They demonstrated how a manipulated README file could trigger the AI to execute arbitrary commands using allowlisted programs, making it stealthy and hard to detect. It was a big deal because it highlighted risks in AI-assisted coding tools that process user inputs automatically.

The Current Situation: What’s Happening Now

John: As of now, on July 31, 2025, Google has acted quickly. They’ve released a patch in version 0.1.14 of the Gemini CLI tool. This update fixes the prompt injection flaw by improving how the tool handles inputs, preventing those sneaky injections from executing malicious code. Users are strongly advised to update immediately to stay safe.

Lila: So, how do people know if they’re affected? And is this just for developers, or could it impact regular users?

John: Good points, Lila. Currently, this flaw primarily affects developers who installed the Gemini CLI tool after its launch. If you’re using it, check your version—anything below 0.1.14 is vulnerable. For regular users, if you’re not tinkering with command-line AI tools, you’re probably safe. But it’s a reminder that AI integrations, even in tools like Gmail summaries or Workspace, can have similar risks. Recent discussions on X (formerly Twitter) from verified accounts like @GoogleAI and security experts emphasize updating promptly. For example, trending threads highlight how this patch uses better input sanitization to block injections.

John: Right now, the tech community is buzzing about this. Reputable outlets like BleepingComputer and Dataconomy have covered how the bug allowed hidden code execution via README files. It’s not isolated—similar prompt injection issues have popped up in other Gemini features, like email summaries in G-Suite, where attackers could craft fake security alerts to phish users.

- Key Fix Details: The patch restricts automatic processing of potentially malicious inputs.

- User Advice: Run ‘gemini –version’ in your terminal and update via pip if needed.

- Broader Impact: This has sparked conversations on X about AI security, with hashtags like #AIPromptInjection trending among devs.

Looking Ahead: Future Implications

John: Looking ahead, this incident could shape how AI tools are developed. We might see more robust security measures, like advanced input validation or user confirmations before executing AI-suggested commands. Google is likely to integrate lessons from this into future updates for Gemini and other products. On the horizon, expect more research into prompt injection defenses, possibly involving machine learning to detect anomalies in real-time.

Lila: That makes sense, but what about other AI tools? Could this happen with ChatGPT or something else?

John: Spot on, Lila. Looking ahead, yes—prompt injection is a common risk across LLMs (Large Language Models). Tools like OpenAI’s CLI equivalents could face similar issues if not guarded. The future might bring industry standards for AI security, with organizations like Mozilla’s 0din platform continuing to bounty-hunt these bugs. For users, staying informed via official channels will be key.

Why This Matters for Everyday Tech Users

John: Even if you’re not a developer, this story shows how AI is weaving into our daily tools, from email to coding. It’s exciting but comes with risks. By understanding these flaws, we can all be smarter about tech safety.

Lila: Totally! So, John, any tips for beginners like me to avoid these pitfalls?

John: Sure thing. Always update your software, be cautious with unknown files, and use antivirus tools. Follow verified sources for news—don’t fall for hype on social media without checking facts.

John’s Reflection: Reflecting on this, it’s fascinating how fast AI evolves, but it reminds us that security must keep pace. This patch is a win for Google, showing responsiveness, yet it underscores the need for ongoing vigilance in AI tech. Overall, it’s a step toward safer innovation.

Lila’s Takeaway: Wow, I learned that even cool AI tools need patches like our phones do. My big takeaway: Stay updated and question inputs—AI is smart, but humans have to be smarter!

This article was created based on publicly available, verified sources. References:

- Tracebit finds major vulnerability in Google Gemini CLI tool

- Google Patches Gemini CLI Flaw Enabling Prompt Injection Attacks

- Gemini CLI Bug Let Hackers Run Hidden Code Via README Files – Dataconomy

- Flaw in Gemini CLI AI coding assistant allowed stealthy code execution

- A flaw in Google’s new Gemini CLI tool could’ve allowed hackers to exfiltrate data | IT Pro